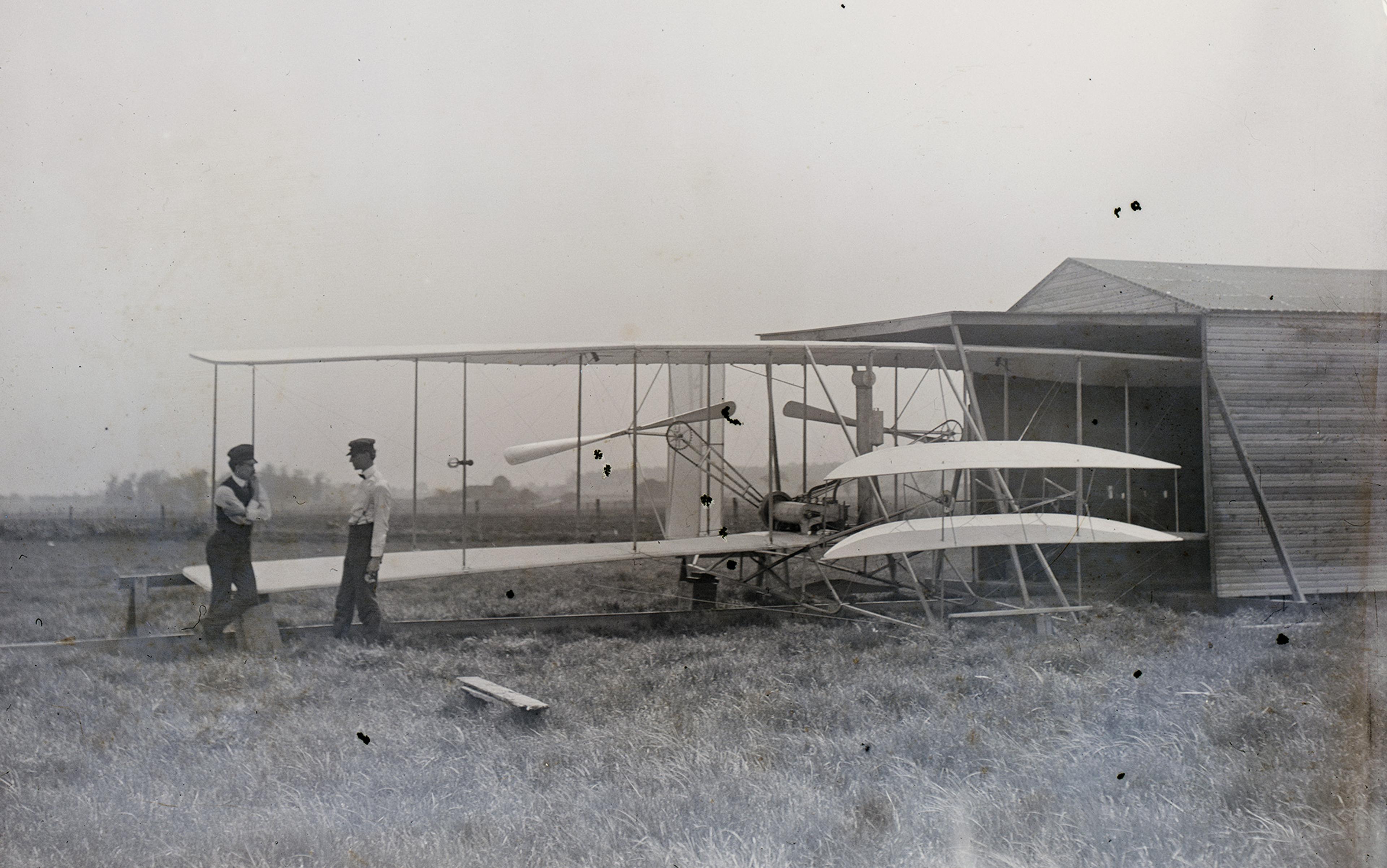

In the town of Dayton, Ohio, at the end of the 19th century, locals were used to the sound of quarrels spilling out from the room above the bicycle store on West Third Street. The brothers Wilbur and Orville Wright opened the shop in 1892, shortly before they became obsessed with the problem of manned flight. Downstairs, they fixed and sold bicycles. Upstairs, they argued about flying machines.

Charles Taylor, who worked on the shop floor of the Wright Cycle Company, described the room above as ‘frightened with argument’. He recalled: ‘The boys were working out a lot of theory in those days, and occasionally they would get into terrific arguments. They’d shout at each other something terrible. I don’t think they really got mad, but they sure got awfully hot.’

We’re so familiar with the fact that the Wright brothers invented the aeroplane that the miraculous nature of their achievement goes unheralded. Wilbur and Orville were not scientists or qualified engineers. They didn’t attend university and they weren’t attached to any corporation. In fact, before their breakthrough, they’d accomplished little of note. So, just how did they come to solve one of the greatest engineering puzzles in history? Their success owes a lot to their talent for productive argument.

The brothers, four years apart, were close. ‘From the time we were little children,’ wrote Wilbur, ‘my brother Orville and myself lived together, worked together, and in fact, thought together.’ But that should not be taken to mean they had the same thoughts; the way they thought together was through argument. It was their father, Milton Wright, who taught them how to argue productively. After the evening meal, Milton would introduce a topic and instruct the boys to debate it as vigorously as possible without being disrespectful. Then he would tell them to change sides and start again. It proved great training. In The Bishop’s Boys (1989), his biography of the brothers, Tom Crouch writes: ‘In time, they would learn to argue in a more effective way, tossing ideas back and forth in a kind of verbal shorthand until a kernel of truth began to emerge.’ After a family friend expressed his discomfort at the way the brothers argued, Wilbur, the elder, explained why arguing was so important to them:

No truth is without some mixture of error, and no error so false but that it possesses some element of truth. If a man is in too big a hurry to give up an error, he is liable to give up some truth with it, and in accepting the arguments of the other man he is sure to get some errors with it. Honest argument is merely a process of mutually picking the beams and motes out of each other’s eyes so both can see clearly…

Wilbur Wright’s description of collaborative intellectual enquiry is one the ancient Greeks would have recognised. Socrates believed that the best way to dispel illusions and identify fallacies was through the exchange of arguments. His exchanges took place in the town square of Athens, often with the city’s most respected intellectuals. His favoured technique was to invite someone to say what they believed (about the nature of justice, say, or happiness) before asking them why and how they could be so sure. Eventually, under persistent questioning, the fragility of the intellectual’s initial confidence would be revealed.

Agnes Callard, associate professor of philosophy at the University of Chicago and an expert on the ancient Greeks, says that Socrates was responsible for one of the founding innovations of Western thought: what she calls the ‘adversarial division of epistemic labour’, in which one party’s job is to throw up hypotheses, while the other’s is to knock them down. This is exactly what happens in a modern courtroom as prosecutor and defender cooperate in a quest for justice by ripping each other’s arguments apart. Even though Socrates himself was sceptical of democracy as a form of government, the idea that people with different views can vigorously yet cooperatively disagree is essential to democratic society.

Today, I believe we’re in danger of losing touch with this principle. Open disagreement is associated with personal animus, stress and futility, partly because we see so many toxic fights on social media. Thanks to the popularisation of research into the flaws of human cognition, we’ve also become increasingly aware of how hard it is to argue without ‘biases’, such as the tendency to pick a side and stick to it rather than weighing evidence for different views dispassionately. It can be tempting, then, to avoid open argument altogether, but this merely transforms our differences into sullen resentment, while depriving us of a powerful tool of enquiry. An alternative is to propose that, when debates do take place, they should be fastidiously civil, emotionally detached and unimpeachably rational.

But the Wright brothers didn’t argue politely or dutifully. They took delight in verbal combat, throwing themselves into every battle. ‘Orv’s a good scrapper,’ said Wilbur, fondly. In another letter, Wilbur chastised a friend for being too reasonable: ‘I see you are back to your old trick of giving up before you are half-beaten in an argument,’ he wrote. ‘I felt pretty certain of my own ground but was anticipating the pleasure of a good scrap before the matter was settled.’

The Wrights were on to something. We shouldn’t avoid robust, passionate, biased argument. In fact, under the right conditions, it can be the fastest route to truth. A good scrap can turn our cognitive flaws into collective virtues.

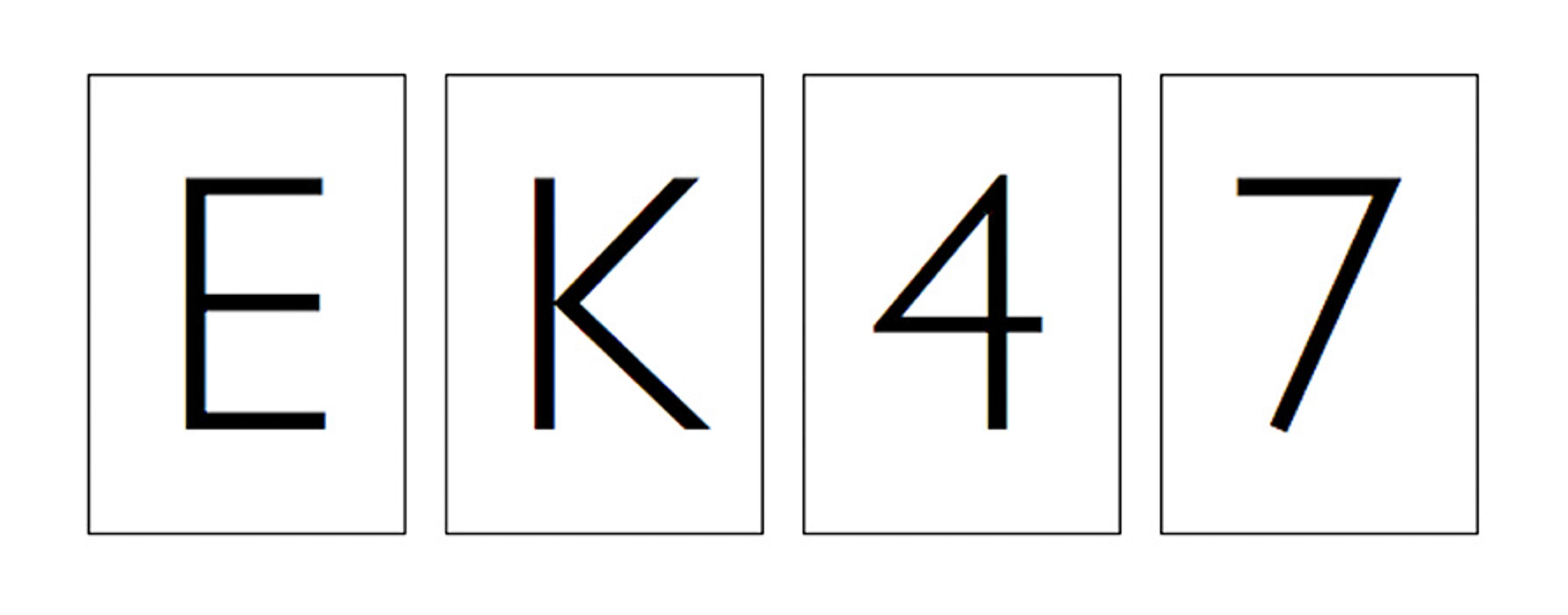

In 1966, the English psychologist Peter Wason introduced a simple experimental design that has had an enduring impact on the study of human reasoning. The Wason Selection Task works like this. Imagine four cards lying flat on a table, each with a number on one side and a letter on the other. Among the faces showing are E, 4 and 7.

Your task is to prove the following rule true or false: ‘All cards with a vowel on one side have an even number on the other side.’ Which card or cards do you need to turn over to test this rule?

Take a minute to think about it.

In Wason’s experiment and in numerous replications since, about 80 per cent of people pick two cards: E and 4. That’s the wrong answer. Turning over 4 doesn’t actually tell you anything; the rule doesn’t state that consonants can’t be paired with even numbers. The correct answer is to turn over E and 7, since those are the only two cards that can prove the rule false. If there is an odd number on the other side of E, or a vowel on the other side of 7, then the case is closed.
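The logic of the correct answer can be spelled out as a short Python sketch. It is an illustration only, not part of Wason's design: the function name `can_falsify` is invented here, and the card "K" is a hypothetical stand-in for the fourth card, which the text does not name. A card is worth turning over only if its hidden side could break the rule.

```python
VOWELS = set("AEIOU")

def can_falsify(face: str) -> bool:
    """Return True if the hidden side of this card could break the rule
    'a vowel on one side means an even number on the other'."""
    if face.isdigit():
        # A number card can break the rule only if it is odd and hides a vowel.
        return int(face) % 2 == 1
    # A letter card can break the rule only if it is a vowel hiding an odd number.
    return face.upper() in VOWELS

# E, 4 and 7 come from the text; "K" is an assumed consonant card,
# included only so that the example has four cards.
cards = ["E", "K", "4", "7"]
to_turn = [card for card in cards if can_falsify(card)]
print(to_turn)  # ['E', '7']
```

The even-numbered card drops out because nothing on its hidden side, vowel or consonant, could contradict the rule.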

It might seem like a trivial mistake but, ever since Wason published his results, the fact that most people flunk this task has been taken as powerful evidence that human reasoning has a fundamental flaw: we’re strongly inclined to search for evidence that confirms our beliefs (or in this case, that confirms the supposed rule), rather than to look for ways to disconfirm them. This flaw has since gained a catchy name – confirmation bias – and become one of the best-evidenced findings in psychology.

It’s been replicated in countless ways. In a landmark 1979 study, psychologists recruited students who had firm views for or against the death penalty, and then presented them with two reports of equally rigorous fictitious studies that seemed either to support or undermine the hypothesis that capital punishment deters crime. Next, the researchers asked the students to assess the robustness of the two studies. As you might have guessed, the students showed favouritism toward the methodology of whichever paper supported their own initial view.

Humans have an instinctive aversion to the possibility of being wrong. Armed with a hypothesis, we bend reality around it, clinging to our opinions even in the face of evidence to the contrary. If I believe that the world is going to hell in a handcart, I’ll notice only bad news and screen out the good. Once I’ve decided that the Moon landings were a hoax, I’ll watch YouTube videos that agree with me and reason away any counterevidence that might cross my path. Intelligence is no protection from confirmation bias, nor is knowledge. In fact, clever and knowledgeable individuals have been shown to be more prone to it, since they’re better at finding reasons to support what they already believe, and more confident in their own mistaken views.

Confirmation bias would seem to be a big problem for our species because it makes us more likely to deceive ourselves about the nature of the world. It also makes us more likely to fall for the lies of those who tell us things we are already predisposed to believe. ‘If one were to attempt to identify a single problematic aspect of human reasoning that deserves attention above all others, the confirmation bias would have to be among the candidates for consideration,’ wrote Raymond Nickerson, a psychologist at Tufts University, in 1998.

This raises a tough question. The ability to reason is meant to be humanity’s supreme attribute, the characteristic that most sets us apart from other animals. Why, then, has evolution endowed us with a tool so faulty that, if you bought it from a shop, you’d send it back? The French evolutionary psychologists Hugo Mercier and Dan Sperber have offered an intriguing answer to this question. If our reasoning capacity is so bad at helping us as individuals figure out the truth, they say, that’s because truth-seeking isn’t its function. Instead, human reason evolved because it helps us to argue more effectively.

Homo sapiens is an intensely collaborative species. Smaller and less powerful than other primates – weedy, compared with our Neanderthal forebears – our human ancestors nevertheless managed to dominate almost any environment they set foot in, mainly because they were so good at banding together to meet their needs. Given the importance of cooperation to our survival, we’ve evolved a finely tuned set of abilities for dealing with each other. In Mercier and Sperber’s view, reasoning is one of those social skills.

In the debate over the nature of human intelligence, Mercier and Sperber are ‘interactionists’, as opposed to ‘intellectualists’. For intellectualists, the purpose of our reasoning capacity is to enable individuals to gain knowledge of the world. In the interactionist view, by contrast, reason didn’t evolve to help individuals reach truths, but to facilitate group communication and cooperation. Reasoning makes us smarter only when we practise it with other people in argument. Socrates was on to something.

In most studies, the Wason Selection Task is completed by individuals working alone. What happens when you ask a group to solve it? That’s what David Moshman and Molly Geil, of the University of Nebraska-Lincoln, wanted to find out. They assigned the task to psychology students, either as individuals, or in groups of half a dozen. As a third condition, some individuals were given the test alone, and then joined a group. The results were dramatic: the success rate of groups was 75 per cent, compared with 9 per cent for individuals.

Ever since Wason himself ran the experiment, it has been repeated many times with many different volunteers, including students from elite universities in the US, and with participants offered a cash bonus for getting the right answer. Yet the results have always been pitiful; rarely did more than 20 per cent of people pick the correct cards. Moshman and Geil vastly improved people’s performance at the task simply by getting them to talk it through. It’s as if a group of high-jumpers discovered that by jumping together they could clear a house.

This is a surprise only if you take the intellectualist perspective. If reasoning is a fundamentally social skill, it’s precisely what we should expect. Moshman and Geil’s analysis of the group discussions further supports the interactionist view. One member of the group would be the first to work out the correct answer, and then the rest of the group would fall into line, but only after a debate. It’s not that one person got to the right answer and everyone immediately agreed. Truth won out only after an exchange of arguments.

While humans have accumulated a vast store of collective knowledge, each of us alone knows surprisingly little, and often less than we imagine (for instance, we tend to overestimate our understanding of even mundane items, such as zips, toilets and bicycles). Yet each of us is plugged into a vast intelligence network, which includes the dead as well as the living; the more open and fluid your local network, the smarter you can be. Moreover, open disagreement is one of the main ways we have of raiding other people’s expertise while donating our own to the common pool.

Sperber and Mercier argue that, looked at through the interactionist lens, confirmation bias is actually a feature, not a bug, of human cognition. It maximises the contribution that each individual makes to a group, by motivating them to generate new information and new arguments. Think about what it’s like when someone contradicts you. You feel motivated to think of all the reasons you’re right and the other person is wrong, especially if it’s an issue you care about. You might be doing so for selfish or emotional reasons – to justify yourself or prove how smart you are. Even so, you’re helping the group generate a diversity of viewpoints and then select the strongest arguments. When you bring your opinions to the table and I bring mine, and we both feel compelled to make the best case we can, the answers that emerge will be stronger for having been forged in the crucible of our disagreement.

Perhaps, rather than trying to cure the human tendency to make self-centred arguments, we should harness it. This is a principle understood by the world’s greatest investor, Warren Buffett. When a company is considering a takeover bid, it often hires an investment banking firm to advise on the acquisition. But this raises a conflict of interest: the bankers have a strong incentive to persuade the board to do the deal. After all: no deal, no fee. In 2009, Buffett proposed that companies adopt a counterbalancing measure:

It appears to me that there is only one way to get a rational and balanced discussion. Directors should hire a second advisor to make the case against the proposed acquisition, with its fee contingent on the deal not going through.

The genius of this approach lies in the fee. Buffett doesn’t just advise getting a second opinion; he advises giving the second advisor a financial incentive to win the argument. Why? Because by doing so, the directors can harness the power of biased thinking, even as they guard against their own. The second advisor is now strongly motivated to think of as many good reasons as it can that the deal should not go through. The board will then have generated a set of arguments for and a set of arguments against, and be in a stronger position to make the right call.

Incentives don’t have to be financial, of course. Tribalism – the desire to see our group win – is usually portrayed, for good reason, as the enemy of reasoned thought. But it can also be an aid to it. In 2019, a team of scientists led by James Evans, a sociologist at the University of Chicago, published their study of a vast database of disagreements: the edits made to Wikipedia pages. Behind every topic, there is a ‘talk page’ that anyone can open up to observe editors debating their proposed additions and deletions. The researchers used machine learning to identify the political leanings of hundreds of thousands of editors – whether they were ‘red’ or ‘blue’ – based on their edits of political pages. Here’s what they discovered: the more polarised the editorial team, the better the quality of the page they were working on.

Ideologically polarised teams were more competitive – they had more arguments than more homogeneous or ‘moderate’ teams. But their arguments improved the quality of the resulting page. Editors working on one page told the researchers: ‘We have to admit that the position that was echoed at the end of the argument was much stronger and balanced.’ That ‘have to’ is important: the begrudging way that each side came to an agreement made the answer they arrived at stronger than it otherwise would have been. As Evans’s team put it: ‘If they too-easily updated their opinion, then they wouldn’t have been motivated to find counter-factual and counter-data arguments that fuel that conversation.’

Pursuing a strongly held conviction, even when it’s wrong, can still be productive at the group level. It’s an elegant paradox: in order for a group to reach rational conclusions, at least some of its individual members should argue a little irrationally. When everyone feels compelled to generate arguments and knock down competing arguments, the weakest arguments get dismissed while the strongest arguments survive, bolstered with more evidence and better reasons. The result is a deeper and more rigorous process of reasoning than any one person could have carried out alone. Emotion doesn’t hinder this process; it electrifies it. By allowing their arguments to run hot, the Wrights were able to beat all the experts in the world.

Open and wholehearted argument can raise the collective intelligence of a group, but the chemistry of a disagreement is inherently unstable. There’s always a possibility it might explode into hostile conflict or vaporise into thin air. Self-assertion can turn into aggression, conviction can become stubbornness, the desire to cooperate can become the urge to herd. I’ve sat around tables at work where most people don’t express a strong point of view and simply accept whatever the most confident person in the room says, or just nod along with the first opinion offered because it seems like the nice thing to do. The result is a lifeless discussion in which the dominant view isn’t tested or developed.

I’ve also sat at tables where different individuals fight their corner, sometimes beyond the point at which it seems reasonable to do so. That kind of debate can be enormously productive; it can also, of course, tip over into an ego battle that generates more heat than light. Over centuries, we’ve developed processes and institutions to stabilise the volatility of disagreement while unlocking its benefits, modern science being the foremost example. It’s also possible to create these conducive conditions ourselves, as the Wikipedians and the Wrights show us.

The first condition, of course, is to openly disagree. The members of the group must bring their own opinions and insights to the table, rather than just adopting those of whomever they like the most or nodding along with the dominant voices in the room. The more diverse the pool of reasons and information, the greater the chance of truly powerful arguments emerging. It’s pointless having a group of smart people around a table if all they do is nod along with each other.

A second condition is that the debate should be allowed to become passionate without becoming a shouting match. How did the Wrights get hot without getting mad? Ivonette Wright Miller, a niece of the brothers, identified a vital ingredient when she noted that the brothers were adept at ‘arguing and listening’. The harder Wilbur and Orville fought, the more intently they listened. Good listening can be a function of close and respectful personal relationships, as in the case of the Wrights, or of tightly structured discussions that force everyone to attend to other viewpoints, as in the case of the Wikipedians.

Third, the members of the group must share a common goal – whether that be solving a puzzle, making a great Wikipedia page, or figuring out how to get a plane in the air and keep it there. If each member is only defending their own position, or trying to get one up on everyone else, then weaker arguments won’t get eliminated and the group won’t make progress. Each one of us should bring our whole, passionate, biased self to the table, while remembering that our ultimate responsibility is to the group. What matters, in the end, is not that I am right but that we are.