When radioactivity was discovered at the end of the 19th century, it surprised scientists. Radioactive decay could transmute one element to another – a conversion previously thought possible only in the dreams of alchemists. In the process, mysterious beams of energy were emitted from the source material, beams that were at first mistaken for a type of light, but soon revealed to be something else altogether. By examining the extent to which these radioactive emissions were able to penetrate other materials, scientists realised that there were different types of decay. Alpha decay resulted in emissions that could be blocked by a thin sheet of paper, whereas gamma decay resulted in emissions that could not be stopped by anything short of a very thick layer of lead.

In terms of penetrating power, a third type of decay, beta decay (or β-decay), was neither exceptionally weak nor exceptionally strong: these emissions could penetrate paper but not sheets of metal. By the early 1900s, scientists knew that beta decay was a process that emitted electrons. But experiments on these emitted electrons raised a mystery of their own. Whereas other types of radioactive emissions always had a discrete amount of energy, which was characteristic of the source material, the electrons that were emitted during β-decay took a continuous range of energy values. This result, which was called the continuous β-spectrum, meant that if you took two electrons that had been emitted from the very same source material during β-decay, one of these electrons sometimes had less energy than the other, and the difference in the amount of energy between the two electrons varied.

Where did the energy that was present in one case, and missing in the other case, go? This was a crucial question because, if it could not be answered, β-decay would involve a violation of the conservation of energy. Some of the greatest experimental physicists of the time revisited this surprising result again and again, but more than 15 years after the continuous β-spectrum was first observed, it still wasn’t clear what explained it.

In early December 1930, Wolfgang Pauli penned an open letter to a group of nuclear physicists who were gathering for a meeting in Tübingen in Germany. Pauli was just 30 years old but already well known for his work on relativity and foundations of quantum theory (including the Pauli exclusion principle). He had been puzzling over the continuous β-spectrum for several years. ‘Dear Radioactive Ladies and Gentlemen,’ his letter began, ‘I have, with respect to … the continuous β-spectrum, hit upon a desperate remedy.’

Pauli’s suggestion was that β-decay produced, in addition to the observed electron, another heretofore unobserved particle. He called this particle the neutron, because it would have neither positive nor negative electric charge. On this model, the amount of energy released by each β-decay event as a whole would be, as expected, discrete. But as that energy could be split in a range of ways between the electron and Pauli’s new particle, the energy of the electron would take a continuous range of values.

This was a truly audacious posit. At the time, the only subatomic particles known to physicists were protons and electrons, and the idea of introducing an additional neutrally charged particle would have been, according to one historian of science, nothing short of ‘abhorrent’. Eugene Wigner, himself a purveyor of perplexing thought experiments in foundations of quantum theory, was reported to have said that his first reaction upon hearing of Pauli’s posit was that it was ‘crazy – but courageous’.

‘I admit,’ Pauli continued in his letter, ‘that my remedy may perhaps appear unlikely from the start, since one probably would long ago have seen the neutrons if they existed.’ The particle in question, he concluded, must have no charge and be very light. These characteristics would allow it to pass through material easily, and thus explain how it was able to evade detection. But what if it turned out that this new particle couldn’t be directly detected at all? In any case, he wrote, ‘nothing ventured, nothing gained.’ He then signed off his letter with apologies for not being in Tübingen in person – there was a ball he needed to attend in Zürich.

As far as historical anecdotes from physics go, this one is especially charming. It’s tempting to imagine Pauli composing the letter in his head while polishing his dancing shoes, reminding himself ‘nothing ventured, nothing gained’ as he headed out to his all-important dance. (One biographer surmises that it was the annual ball hosted by the Society of Italian Students in Zürich, and held at the Baur au Lac, the most distinguished hotel in the city.) Only days before, he had formalised his divorce following a short and ill-fated marriage to a German dancer, who had fallen in love with a chemist instead of her new husband. Both the profession and the mediocre standing of his romantic rival had frustrated Pauli. In one letter he wrote: ‘If it had been a bullfighter – with someone like that I could not have competed – but such an average chemist!’ At any rate, he clearly judged that taking concrete steps to move past his marital failure was more important than appearing in person in Tübingen to explore the possibility of a new particle, however crazy and courageous the latter might be.

Best of all, it turned out that Pauli’s desperate remedy was just the thing. A very small, very light, electrically neutral particle, which is emitted alongside an electron, does in fact explain the continuous β-spectrum. It isn’t the neutron – that name was commandeered by James Chadwick for the much larger neutral particle he discovered as part of the nucleus in 1932. But Pauli’s particle, which came to be called the neutrino, went on to gain widespread acceptance among physicists even though it would escape experimental confirmation until 1956.

To this day, the neutrino remains one of the most mysterious subatomic particles. Tens of trillions of neutrinos from the Sun pass through our bodies every second, and yet they interact so weakly with matter that physicists have to set up experiments deep in underground mine shafts or buried within the Antarctic ice to try to keep interfering signals to a minimum. The adjective ‘ghostly’ is widely used in popular science descriptions of the neutrino; one article in The New York Times, about the lengths physicists have to go to detect neutrinos, called them ‘aloof’. For a time, physicists thought neutrinos had no mass; now it’s widely agreed that they do, but neutrinos are also so light that no one has yet been able to determine what precisely that mass is. Some contemporary physicists even think that neutrinos are their own antiparticle, and that they might play a role in explaining the high proportion of matter to antimatter in the present-day Universe.

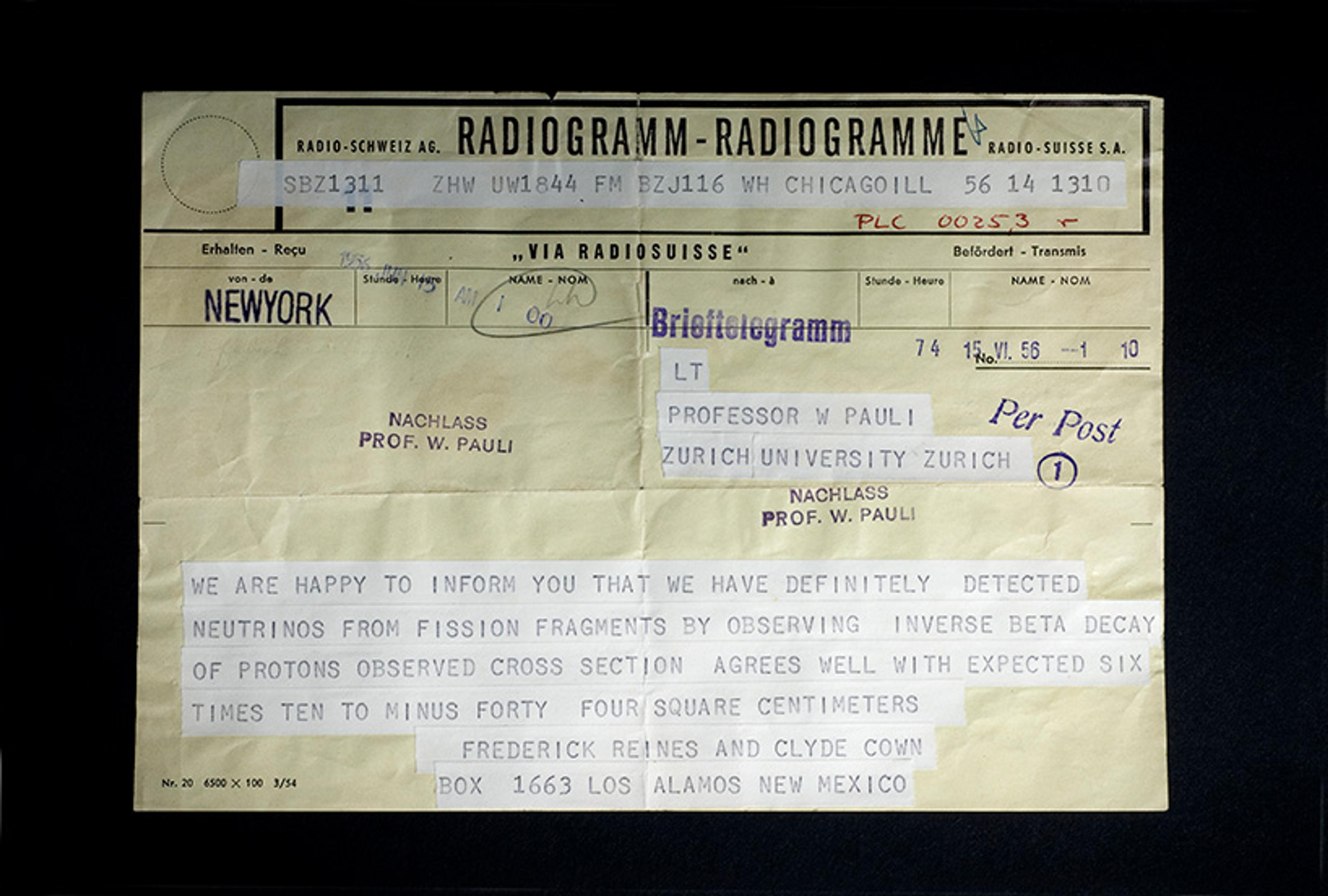

Telegram sent on 14 June 1956 from the physicists Frederick Reines and Clyde Cowan to Wolfgang Pauli announcing the detection, for the first time, of neutrinos. Courtesy and ©2006-2024 CERN

As scientific posits go, then, Pauli’s neutrino was an exceptionally good one. But that became clear only later. When Pauli was writing, the existence of the neutrino was still a crazy, if courageous, hypothesis. So why did he feel justified in making it? Why was Pauli desperate? And why did his proposal count as a remedy?

These are questions for historians of science, but they ought to be of interest to philosophers as well. In particular, they ought to be of interest to philosophers who work on questions about what the world is like – who work, in other words, on metaphysical questions. (‘Metaphysics’ describes a specific subarea of philosophy, but metaphysical questions are also pervasive throughout the field: when ethicists ask ‘What is goodness?’ and political philosophers ask ‘What is race?’ they are asking metaphysical questions.)

Philosophers working on metaphysical questions are well known for their odd and unverifiable (or at least not immediately verifiable) posits. Think of Plato’s realm of the Forms – abstract, perfect, unchanging concepts like Beauty and Justice – or Leibniz’s contention that ours is just one in a vast array of possible worlds. Contemporary philosophers, meanwhile, argue with one another over whether people have temporal parts just as we have spatial parts, whether all material objects have an element of consciousness, and whether numbers are real. It’s easy to be suspicious of these sorts of debates – what, after all, would ever settle them one way or the other? But, as Pauli’s case shows, philosophers aren’t the only ones who put forward odd entities without any clear prospect for experimental detection. Perhaps, then, metaphysicians can learn something from this historical episode about when it makes sense to engage in this kind of speculation, and when we’re best served by keeping our desperate remedies to ourselves.

The suggestion that I am making here is in keeping with a broader approach to metaphysical questions that I call methodological naturalism. These days, the vast majority of philosophers adhere to some variety of naturalism, which is to say that they take science to be a paradigm of successful enquiry into what the world is like. Virtually all contemporary philosophers take it to be obvious that, when we’re making claims about what the world is like, we need to pay attention to and avoid conflicts with our best scientific theories. This is why no one’s a fan any longer of the old Aristotelian view that everything is made of earth, air, fire and water – that view conflicts with chemistry. The same goes for outdated biological essentialist views about race, which conflict with contemporary genetics. And the same concern has kept philosophers arguing for nearly a century about whether relativity theory in fact conflicts with certain views about the nature of time. If it does, so much the worse for the philosophers on that side.

The key thing to notice, however, is that there’s more to science than the theories that end up in our textbooks. There’s also the methodology that produces those theories. The methodological naturalist thinks that it’s important for philosophers to take note of this methodology as well. When trying to decide which metaphysical position to accept, we should pay attention not only to any possible conflicts with scientific theories, but also to whether the methodology of science has any bearing on that philosophical debate.

Methodological naturalism gives rise to an immediate objection. Why think that using scientific methodology to inform philosophical questions is going to have any impact? What does the methodology of science have to say about Plato’s Forms or possible worlds or the existence of the number 2? When we think about the methodology of science, the sort of thing that usually comes to mind involves white-coated lab scientists hunched over their benches, geologists examining rock formations in the desert, astronomers peering at the night sky. These sorts of experimental investigation just don’t seem to have any bearing on philosophical questions.

That may be right. But the methodology of science goes beyond experimental techniques. In addition to the empirical aspects of scientific methodology – the aspects that involve collecting data about the world – there are also extra-empirical aspects – aspects that go beyond the data in important ways. It is these extra-empirical aspects of scientific methodology that the methodological naturalist will focus on.

The most well-known example of extra-empirical reasoning in science is Occam’s razor. According to Occam’s razor, we should choose the simplest hypothesis that is compatible with the data. Nothing about the data requires we choose the simplest hypothesis. That’s a commitment, insofar as we hold it, that goes beyond the empirical. And it certainly sounds plausible enough, especially in relatively simple cases – Pauli, for instance, could have posited two little neutral particles to explain β-decay instead of one, but he didn’t. Why not? Because that would add an unnecessary complication.

Unfortunately, few cases are so straightforward. Consider contemporary philosophers who argue for a plurality of possible worlds: every way the world could be, these philosophers say, is a way that some world is. They recognise that they are adding quite a bit of complexity in the sense of introducing many, many more entities than we might otherwise have thought existed. But, they protest, that’s not the kind of complexity that really matters. The plurality of possible worlds allows us to analyse claims about what could have happened or must have happened or would have happened in a straightforward way (what it is for something to be necessary is for it to be the case in every possible world). Without possible worlds, no such analysis is forthcoming. So, it isn’t just that fans of possible worlds have a more complex theory – they have a more complex theory in one way, and a less complex theory in another. They think that, insofar as Occam’s razor is relevant, it pulls in their favour.

What this shows is that, although Occam’s razor is a good illustration of what the methodological naturalist means by an extra-empirical aspect of scientific methodology, it isn’t actually the best test case for this approach, since in most cases the interpretation of this principle is itself fairly complicated. For a better test case, we can go back to the neutrino. Set aside the question of why Pauli posited one particle instead of two, and focus on the question of why Pauli felt the need to posit the neutrino at all. Given what an odd entity it was, given how desperate a remedy, why think it was necessary?

The reason is fairly straightforward. So straightforward, in fact, that it often gets overlooked in discussions of the more nuanced aspects of scientific methodology. Pauli, as he sat down to write his letter to his colleagues in Tübingen, faced a situation that is one of the most common we find in all of science: he had a set of data that exhibited an interesting and unexpected pattern. He knew there had to be some reason why it obtained. If that explanation turned out to involve a weird, aloof little particle, that for all he knew might never admit of direct detection – well, that wasn’t ideal, but there still had to be an explanation. Indeed, one of the most basic principles behind scientific reasoning is that we cannot leave robust patterns in our observations unexplained. When we see a correlation between smoking and lung cancer, we can’t just shrug. When we notice that light emitted by every galaxy we look at through our telescope is redshifted, we can’t just move on to the next experiment. If there’s a surprising pattern in the data, we need to identify some reason why that pattern obtains. Otherwise, we aren’t in good standing as scientists.

The story of how Pauli’s ghostly particle came to be widely accepted by other physicists further buttresses this interpretation. When he first put forward the neutrino as a possible explanation for the continuous β-spectrum, it was not in fact the only explanation available. The alternative, advocated by the prominent Danish physicist Niels Bohr, was to take the conservation of energy to hold merely probabilistically. This was a reasonable hypothesis. For all physicists of the time knew, it might turn out that the conservation of energy was like the second law of thermodynamics: a useful rule of thumb, but subject to counterexample as our experimental techniques got more and more refined. Pauli, however, was adamantly opposed to Bohr’s solution. In his letters to other physicists, he expressed his frank opinion that Bohr, who was both a friend and recipient of a Nobel Prize, was ‘on a completely wrong track’. In one letter directly to Bohr, Pauli asked: ‘Do you intend to mistreat the poor energy law further?’ In another, in which he acknowledged receipt of a manuscript Bohr had sent, Pauli wrote: ‘I must say that your paper has given me little satisfaction.’

Then, in 1933, Charles Drummond Ellis and Nevill Mott released data showing that the continuous β-spectrum had a sharp upper limit. This data, which was confirmed with more precision over the next year, was incompatible with Bohr’s explanation for β-decay in terms of a merely probabilistic conservation of energy law. Soon after, Enrico Fermi put forward a formal theory of β-decay that included Pauli’s neutrinos and, in a letter to Pauli on 15 March 1934, Bohr acknowledged the possibility of ‘the real existence of the neutrino’ for the first time. By 1936, Bohr was admitting that the neutrino hypothesis was probably correct in his own published work.

This suggests that Pauli and Bohr shared a core commitment to the principle that one cannot leave robust patterns in the data without an explanation – even if the only way of explaining them is by introducing an unsettling entity such as the neutrino. The key difference between the two was the point at which they recognised that there was no other explanation available besides the neutrino. For Pauli, that seemed obvious as early as 1930. For Bohr (and many others), it wasn’t clear until after the additional data collected by Ellis and Mott ruled out his alternative hypothesis.

Niels Bohr and Wolfgang Pauli (right) at the 1948 Solvay Congress. Courtesy and ©2006-2024 CERN

There are other examples, too, of this kind of explanatory reasoning leading to the introduction of highly surprising, odd or poorly understood entities in physics. Consider the apparently nonlocal gravitational force introduced by Isaac Newton, or the electromagnetic field as introduced by Michael Faraday in 1852. Perhaps my favourite example, though, is dark energy as introduced by cosmologists at the end of the 20th century. In the late 1990s, astronomical observations showed that, not only is the Universe expanding, but the rate at which it is expanding is accelerating. Here’s how the NASA Science website, 25 years later, described the reception of this experimental result: ‘No one expected this, no one knew how to explain it. But something was causing it.’ Indeed, the site goes on to say:

Theorists still don’t know what the correct explanation is, but they have given the solution a name. It is called dark energy. What is dark energy? More is unknown than is known. We know how much dark energy there is because we know how it affects the universe’s expansion. Other than that, it is a complete mystery.

What these examples, taken together, show is that leaving an observed pattern in the data without an explanation is something that physicists are unwilling to do, and this is true even if the only way to explain the pattern is to introduce entities that are novel, weird or poorly understood. This core commitment of scientific methodology doesn’t have a standard name, much less an evocative one like Occam’s razor, but in other work I’ve called this the pattern explanation principle or PEP for short. PEP, on my view, is perhaps the least contentious of the extra-empirical principles that play a role in scientific reasoning. And, indeed, PEP is the way in which odd entities often end up getting introduced into our overall picture of what the world is like. When scientists find themselves in this kind of position, they are more committed to there being something that explains the pattern in question than they are to any particular account of what that entity is or to any previous scruples that they might have had about entities of that type.

Insofar as one is a methodological naturalist, then, one should take PEP seriously when doing philosophy as well as when doing physics. Introducing odd, unverifiable entities in philosophy is in good standing as long as we need those entities in order to explain some sort of pattern in the data that we observe about the world.

Here’s an example of a philosophical debate where PEP has the potential to play an important role. Contemporary metaphysical views about laws of nature tend to break down into two broad camps. On the one hand, there are descriptive accounts of laws, which understand laws as just descriptions of patterns in the data. What it means to say, as Newton did, that ‘It’s a law that F = ma’ is just that it’s always the case that net force is equal to mass times acceleration. Views in this camp are often labelled ‘Humean’ after the 18th-century Scottish philosopher David Hume, who argued against metaphysical speculation in general, and against necessary connections – including those underwritten by natural laws – in particular. The current most popular descriptive account of laws is David Lewis’s best system analysis, according to which laws are the set of propositions describing the world that best balance simplicity and descriptive strength. Fans of this account sometimes introduce it using the following scenario: suppose God was trying to tell you as much about the world as he could, without overwhelming your meagre human faculties. The laws are the propositions that he would use.

On the other hand, there are governing accounts of laws. On governing accounts, laws don’t just describe the way things are, they make things that way. What it means to say that ‘It’s a law that F = ma’ is that net force is always equal to mass times acceleration because there’s a law making this the case. The trick for these accounts is to say exactly what kinds of things laws are, and how exactly they do their explanatory work. Recent work on these questions has highlighted an important distinction between views on which laws produce later states of affairs from earlier ones, and views on which laws are constraints on spacetime as a whole. But either way, proponents of these views often end up saying that laws are primitive, sui generis entities, which cannot be given any further analysis or understood in terms of more familiar things to which we are already committed.

While our best scientific theories certainly refer to laws, they don’t say much about what kinds of entities laws are and, in particular, they don’t take any stand at all on whether laws are merely descriptive, or if they play a full-blown governing role. But the methodological naturalist isn’t just interested in whether a particular metaphysical view is compatible with the content of our best scientific theories. They also want to know how that metaphysical view stands with respect to the methodology that produces our best science.

And, if I’m correct that the pattern explanation principle is one of the core commitments of scientific methodology, then this looks like a pretty clear win for the governing account of laws. After all, we have a number of patterns in our data, for instance the pattern that net force is always equal to mass times acceleration. According to PEP, this pattern requires an explanation. On the governing account of laws, it has one. Maybe we don’t know what laws are. Maybe they are weird or even wholly sui generis entities. But so, too, were the neutrino and the electromagnetic field and dark energy. Physicists still accepted them, because they were needed in order to play an important explanatory role. Philosophers, at least insofar as they are methodological naturalists, should take the same attitude toward governing laws.

Of course, there are things that the defender of a descriptive account of laws can say in response. They might argue that the kinds of comprehensive patterns that laws are supposed to describe are not the kinds of patterns to which PEP applies. (But surely Pauli would not have been satisfied by pointing out that the continuous β-spectrum that had been observed thus far in fact obtained in all cases, observed and unobserved alike. That alone would not have explained why the pattern obtained.) Or fans of the descriptive account might argue that there is something about the mysterious nature of governing laws that blocks them from playing the kind of explanatory role identified by PEP. (But what? Remember, Pauli didn’t really have any idea what a neutrino was, but he still became confident that such things existed.) Or they might try to claim that there is a sense in which merely descriptive laws can play the relevant explanatory role after all.

It’s hard to say whether any of these moves is very promising, but I’m open to seeing how they play out. What I don’t think we should be willing to do is to give up methodological naturalism. After all, what are the alternatives? If you don’t think the methodology of science is a good guide to philosophical theorising, then why do you care about conflicts with our best scientific theories? You might as well let philosophical speculation float free from any grounding in science at all. And if science isn’t relevant to metaphysical questions, then how can philosophers claim to be putting forward theories about what the world is like? Perhaps we can reconceptualise metaphysical theorising as something more like an artistic practice, which expands our imaginative capabilities, or as a purely hypothetical exercise in which merely possible concepts are explored and developed. But both of these moves involve a radical reinterpretation of what many philosophers working on metaphysical questions, both historical and contemporary, think they are doing when they put forward their views.

Of course, as the nuance of the above discussion shows, there’s no way around the fact that methodological naturalism is going to be difficult. Teasing apart the various aspects of scientific methodology, especially the extra-empirical aspects, is something that philosophers of science have long been trying to do without reaching any clear consensus. Indeed, these days much of the emphasis in philosophy of science is on the way in which scientific methodology varies from context to context, and some philosophers have given up on the idea of science having any unified methodology at all. The methodological naturalist will need to wrestle with difficult questions about how these contexts interact, among many others. But no one ever promised that philosophy – and metaphysical theorising in particular – would be easy. And, in any case, as Pauli might say: nothing ventured, nothing gained.