Usually when people imagine a self-aware machine, they picture a device that emerges through deliberate effort and that then makes its presence known quickly, loudly, and (in most scenarios) disastrously. Even if its inventors have the presence of mind not to wire it into the nuclear missile launch system, the artificial intelligence will soon vault past our capacity to understand and control it. If we’re lucky, the new machine will simply break up with us, like the operating system in the movie Her. If not, it might decide not to open the pod bay doors to let us back into the spaceship. Regardless, the key point is that when an artificial intelligence wakes up, we’ll know.

But who’s to say machines don’t already have minds? What if they take unexpected forms, such as networks that have achieved a group-level consciousness? What if artificial intelligence is so unfamiliar that we have a hard time recognising it? Could our machines have become self-aware without our even knowing it? The huge obstacle to addressing such questions is that no one is really sure what consciousness is, let alone whether we’d know it if we saw it. In his 2012 book, Consciousness, the neuroscientist Christof Koch speculated that the web might have achieved sentience, and then posed the essential question: ‘By what signs shall we recognise its consciousness?’

Despite decades of focused effort, computer scientists haven’t managed to build a conscious AI system intentionally, so it can’t be easy. For this reason, even those who fret the most about artificial intelligence, such as University of Oxford philosopher Nick Bostrom, doubt that AI will catch us completely unawares. And yet, there is reason to think that conscious machines might be a byproduct of some other effort altogether. Engineers routinely build technology that behaves in novel ways. Deep-learning systems, neural networks and genetic algorithms train themselves to perform complex tasks rather than follow a predetermined set of instructions. The solutions they come up with are as inscrutable as any biological system. Even a vacuum-cleaning robot’s perambulations across your floor emerge in an organic, often unpredictable way from the interplay of simple reflexes. ‘In theory, these systems could develop – as a way of solving a problem – something that we didn’t explicitly know was going to be conscious,’ says the philosopher Ron Chrisley, of the University of Sussex.

Even systems that are not designed to be adaptive do things their designers never meant. Computer operating systems and Wall Street stock-trading programs monitor their own activities, giving them a degree of self-awareness. A basic result in computer science is that self-referential systems are inherently unpredictable: a small change can snowball into a vastly different outcome as the system loops back on itself. We already experience this. ‘The instability of computer programs in general, and operating systems in particular, comes out of these Gödelian questions,’ says the MIT physicist Seth Lloyd.

Any intelligence that arises through such a process could be drastically different from our own. Whereas all those Terminator-style stories assume that a sentient machine will see us as a threat, an actual AI might be so alien that it would not see us at all. What we regard as its inputs and outputs might not map neatly to the system’s own sensory modalities. Its inner phenomenal experience could be almost unimaginable in human terms. The philosopher Thomas Nagel’s famous question – ‘What is it like to be a bat?’ – seems tame by comparison. A system might not be able – or want – to participate in the classic appraisals of consciousness such as the Turing test. It might operate on such different timescales or be so profoundly locked-in that, as the MIT cosmologist Max Tegmark has suggested, in effect it occupies a parallel universe governed by its own laws.

The first aliens that human beings encounter will probably not be from some other planet, but of our own creation. We cannot assume that they will contact us first. If we want to find such aliens and understand them, we need to reach out. And to do that we need to go beyond simply trying to build a conscious machine. We need an all-purpose consciousness detector.

You might reasonably worry that scientists could never hope to build such an instrument in their present state of ignorance about consciousness. But ignorance is actually the best reason to try. Even an imperfect test might help researchers to zero in on the causes and meanings of consciousness – and not just in machines but in ourselves, too.

To the extent that scientists comprehend consciousness at all, it is not merely a matter of crossing some threshold of complexity, common though that trope may be in science fiction. Human consciousness involves specific faculties. To identify them, we radiate outward from the one thing we do know: cogito ergo sum. When we ascribe consciousness to other people, we do so because they look like us, act like us, and talk like us. Through music, art, and vivid storytelling, they can evoke their experiences in us. Tests that fall into these three categories – anatomy, behaviour, communication – are used by doctors examining comatose patients, biologists studying animal awareness, and computer scientists designing AI systems.

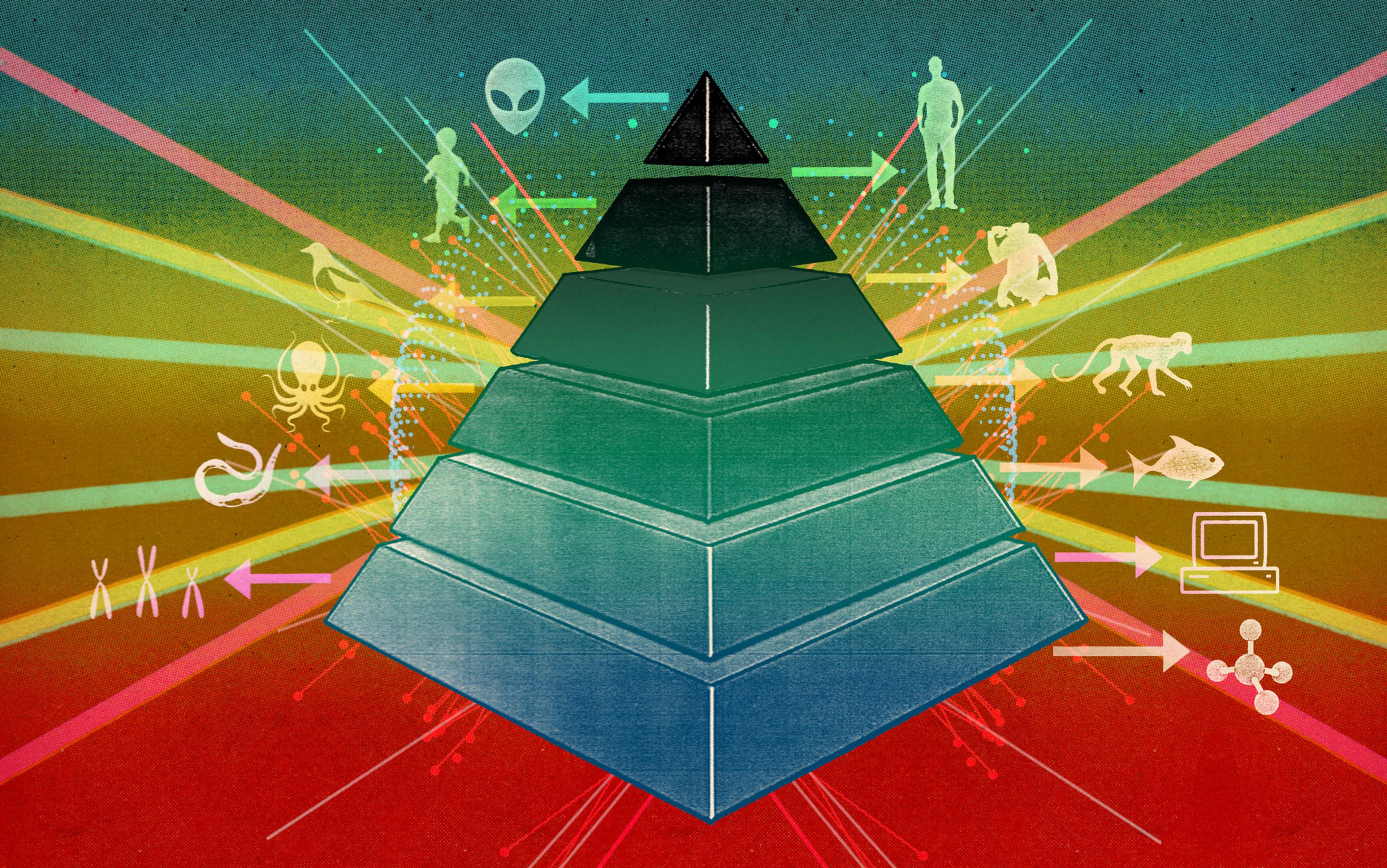

The most systematic effort to piece all the tests together is ‘ConsScale’, a rating procedure developed in 2008 by the Spanish AI researcher Raúl Arrabales Moreno and his colleagues. You fill in a checklist, beginning with anatomical features, on the assumption that human-like consciousness arises only in systems with the right components. Does the system have a body? Memory? Attentional control? Then you look for behaviours and communicativeness: Can it recognise itself in a mirror? Can it empathise? Can it lie?

Based on the answers, Arrabales can determine where the system falls on a scale whose levels are numbered -1 to 11, running from ‘dead’ to ‘superhuman’. The scale is designed to recapitulate biology, both phylogeny and ontogeny, from viruses to worms to chimps to human beings, and from newborn babies to toddlers to adults. It also parallels, roughly, human conscious states from deep coma to partial awareness to transcendentally aware monk. At the lowest levels, conscious experience is a coarse sensation of this versus that, as the philosopher David Chalmers has described: ‘We need imagine only something like an unarticulated “flash” of experience, without any concepts, any thought, or any complex processing in the vicinity.’ At the highest level is a richness of experience beyond the normal human capacity. It is achieved, perhaps, by conjoined twins whose brains are autonomous yet linked, so that each has access to the other’s streams of consciousness.

| Level | Name | Explanation | Animal example | Human age equivalent | Machine example |
|---|---|---|---|---|---|
| -1 | Disembodied | Blends into environment | Molecule | | |
| 0 | Isolated | Has a body, but no functions | Inert chromosome | | Stuffed animal |
| 1 | Decontrolled | Has sensors and actuators, but is inactive | Corpse | | Powered-down computer |
| 2 | Reactive | Has fixed responses | Virus | Embryo to 1 month | ELIZA |
| 3 | Adaptive | Learns new reactions | Earthworm | 1–4 months | Smart thermostat |
| 4 | Attentional | Focuses selectively, learns by trial and error, and forms positive and negative associations (primitive emotions) | Fish | 4–8 months | CRONOS robot |
| 5 | Executive | Selects goals, acts to achieve them, and assesses its own condition | Octopus | 8–12 months | Cog |
| 6 | Emotional | Has a range of emotions, body schema, and minimal theory of mind | Monkey | 12–18 months | Haikonen architecture (partly implemented by XCR-1 robot) |
| 7 | Self-Conscious | Knows that it knows (higher-order thought) and passes the mirror test | Magpie | 18–24 months | Nexus-6 (Do Androids Dream of Electric Sheep?) |
| 8 | Empathic | Conceives of others as selves and adjusts how it presents itself | Chimpanzee | 2–7 years | HAL 9000 (2001: A Space Odyssey) |
| 9 | Social | Has full theory of mind, talks, and can lie | Human | 7–11 years | Ava (Ex Machina) |
| 10 | Human | Passes the Turing test and creates cumulative culture | Human | 12+ years | Six (Battlestar Galactica) |
| 11 | Super-Conscious | Coordinates multiple streams of consciousness | Bene Gesserit (Dune) | Augmented | Samantha (Her) |
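One way to read the ConsScale table is as a cumulative checklist: a system earns a level only if it also meets the criteria of every level below it. The sketch below assumes exactly that simplification. The criterion names are hypothetical shorthand condensed from the table's explanations, not ConsScale's actual items, and the authors' online calculator may weigh the answers differently.

```python
# Hypothetical, heavily condensed criteria: one per level, paraphrasing the ConsScale table.
LEVELS = [
    (0, "Isolated", "has_body"),
    (1, "Decontrolled", "has_sensors_and_actuators"),
    (2, "Reactive", "has_fixed_responses"),
    (3, "Adaptive", "learns_new_reactions"),
    (4, "Attentional", "focuses_selectively"),
    (5, "Executive", "selects_goals"),
    (6, "Emotional", "has_range_of_emotions"),
    (7, "Self-Conscious", "passes_mirror_test"),
    (8, "Empathic", "models_others_as_selves"),
    (9, "Social", "can_lie"),
    (10, "Human", "creates_cumulative_culture"),
    (11, "Super-Conscious", "coordinates_multiple_streams"),
]


def cons_level(traits):
    """Return (level, name): the highest level whose criterion, and every
    lower level's criterion, appears in the set `traits`."""
    level, name = -1, "Disembodied"
    for lvl, lvl_name, criterion in LEVELS:
        if criterion not in traits:
            break  # a gap stops the climb, even if higher criteria are met
        level, name = lvl, lvl_name
    return level, name


if __name__ == "__main__":
    reflex_machine = {"has_body", "has_sensors_and_actuators", "has_fixed_responses"}
    print(cons_level(reflex_machine))  # (2, 'Reactive')
```

The cumulative rule is what makes the scale a continuum of nested capacities: adding a mirror-test pass to the reflex machine above would not move it past level 2, because it still fails the level-3 check.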

You can use the authors’ online calculator to see where your goldfish and cat fall on the scale. ELIZA, a pioneering computer psychoanalyst created at MIT in the 1960s, comes in at level 2 (‘reactive’). XCR-1, a robot built by the roboticist Pentti Haikonen, has neuron-like electronic circuitry and can tell you, plaintively, when it’s in pain; it rates a 6 (‘emotional’). A specialised version of the calculator assesses computer-controlled characters in first-person-shooter video games, which may well be the most sophisticated AI that people typically encounter; let us hope they stay virtual.

ConsScale relies on the big and highly contentious hypothesis that consciousness is a continuum. Many philosophers, such as John Searle of the University of California at Berkeley, recoil from that idea because it implies panpsychism, the belief that everything around us is conscious to some degree. Panpsychism strikes these sceptics as trivialising the concept of consciousness and reverting to a prescientific view of the world. But for those who wonder and worry about whether we have inadvertently created conscious systems, ConsScale has a more significant failing: it presupposes that the answer is yes. Consciousness tests in general suffer from a problem of circularity. Any test becomes interesting only if it surprises us, but if it surprises us, do we trust it?

Suppose a test finds that a thermostat is conscious. If you’re inclined to think a thermostat is conscious, you will feel vindicated. If sentient thermostats strike you as silly, you will reject the verdict. In that case, why bother conducting the test at all?

What we really need is a consciousness detector that starts from first principles, so it doesn’t presuppose the outcome. At the moment, the approach that stirs the most souls is Integrated Information Theory, or IIT, conceived by the neuroscientist Giulio Tononi at the University of Wisconsin. As with many other accounts of consciousness, IIT’s starting point is that conscious experience is a unity, incapable of being subdivided. Consciousness is a cohesive experience that fuses memories and sensations into a consistent, linear narrative of the world.

IIT does not presume what a conscious system should look like. It could be a cerebrum, a circuit, or a black interplanetary cloud. It just has to consist of some type of units equivalent to neurons: on-off devices that are linked together into a complex network. A master clock governs the network. At each tick, each device switches on or off depending on the status of other devices. The agnostic attitude to composition is one of IIT’s great strengths. ‘What I like about it is that it doesn’t prejudice the issue from the outset by defining consciousness to be a biological condition,’ says Chrisley.
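The kind of network IIT assumes, on-off units updated in lockstep by a master clock, is easy to simulate. What follows is a minimal sketch; the three units and their AND, OR and XOR wiring are invented for illustration and do not come from Tononi's tool.

```python
# A hypothetical three-unit network. Each rule reads the *previous* state.
RULES = (
    lambda s: s[1] & s[2],  # unit 0 switches on only if units 1 and 2 are both on
    lambda s: s[0] | s[2],  # unit 1 switches on if unit 0 or unit 2 is on
    lambda s: s[0] ^ s[1],  # unit 2 switches on if exactly one of units 0 and 1 is on
)


def tick(state, rules):
    """One tick of the master clock: all units update simultaneously."""
    return tuple(rule(state) for rule in rules)


def run(state, rules, ticks):
    """Return the trajectory: the starting state followed by one state per tick."""
    trajectory = [state]
    for _ in range(ticks):
        state = tick(state, rules)
        trajectory.append(state)
    return trajectory


if __name__ == "__main__":
    for t, s in enumerate(run((1, 0, 0), RULES, 6)):
        print(t, s)
```

Because every unit reads the old state before any unit changes, the order in which the rules are listed does not matter; that is what the shared clock buys.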

But what people really like about IIT is that it is quantitative. It spells out an algorithm for calculating the degree of network interconnectedness, or Φ, defined as the amount of information that is not localised in the individual parts but is spread out over the entire network. The theory associates this quantity with the degree of consciousness. Tononi provides an online tool for doing the Φ calculation. You map out the putative conscious system by drawing a graph akin to an electronic wiring diagram. In principle, you could enter any kind of stick-figure diagram, from the control algorithm of a robotic vacuum cleaner to the full connectome (map of neural linkages) of the human brain. The software will generate a table of all possible state transitions, which tells you the system’s stream of consciousness: which conscious state will give way to which other conscious state.
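The table of state transitions can be produced by brute force: try every on/off combination and record what follows it. The sketch below uses the same hypothetical three-unit network as an illustration (redefined so the block stands alone). With n units the table has 2^n rows, a first hint of why these calculations become expensive.

```python
from itertools import product

# Hypothetical three-unit network (AND, OR, XOR wiring), chosen only for illustration.
RULES = (
    lambda s: s[1] & s[2],
    lambda s: s[0] | s[2],
    lambda s: s[0] ^ s[1],
)


def transition_table(rules):
    """Map every possible state to the state that follows it on the next tick."""
    table = {}
    for state in product((0, 1), repeat=len(rules)):
        table[state] = tuple(rule(state) for rule in rules)
    return table


if __name__ == "__main__":
    for now, after in transition_table(RULES).items():
        print(now, "->", after)
```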

From there, the software goes through all the possible ways the network can be cleft in two. It calculates how tightly linked the two sections are by mathematically rattling one side to see how the other responds. Among all those ways of divvying up the system, one will respond the least to being rattled. On the principle that any chain is only as strong as its weakest link, this minimum effect gives you a measure of how thoroughly interlinked the system is, and that quantity is Φ. The value of Φ is low in a fragmented network (which has only limited connections) or in a fully integrated network that behaves as a single block (which is so tightly connected that it has no flexibility). The value of Φ is high in a hierarchical network composed of independent clusters that interact – the human brain being a prime example.
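A toy version of this cut-and-rattle procedure fits in a few dozen lines. It is loosely modelled on Tononi's early formulation and is not the algorithm behind his online tool. Rattling one side is approximated by trying every network state equally often; the response is measured as the mutual information between one part's current state and the other part's next state; and no normalisation is applied. Φ here is simply the smallest total information crossing any two-way cut.

```python
from collections import Counter
from itertools import combinations, product
from math import log2


def mutual_information(pairs):
    """I(X;Y) in bits, treating every (x, y) pair in the list as equally likely."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum(c / n * log2(c * n / (px[x] * py[y])) for (x, y), c in joint.items())


def step(state, rules):
    """Synchronous update: every unit reads the old state."""
    return tuple(rule(state) for rule in rules)


def effective_info(a, b, rules):
    """How much B's next state reveals about A's current state when every
    network state is tried equally often (the 'rattling')."""
    pairs = []
    for s in product((0, 1), repeat=len(rules)):
        nxt = step(s, rules)
        pairs.append((tuple(s[i] for i in a), tuple(nxt[j] for j in b)))
    return mutual_information(pairs)


def toy_phi(rules):
    """Minimum, over all two-way cuts, of the information crossing the cut.
    Needs at least two units."""
    units = range(len(rules))
    weakest = float("inf")
    for k in range(1, len(rules) // 2 + 1):
        for a in combinations(units, k):
            b = tuple(i for i in units if i not in a)
            crossing = effective_info(a, b, rules) + effective_info(b, a, rules)
            weakest = min(weakest, crossing)
    return weakest


if __name__ == "__main__":
    swap = [lambda s: s[1], lambda s: s[0]]      # each unit copies the other
    isolated = [lambda s: s[0], lambda s: s[1]]  # each unit copies itself
    print(toy_phi(swap), toy_phi(isolated))      # 2.0 0.0
```

The two units that copy each other cannot be cut apart without losing 2 bits, so the weakest link is strong; the two self-copying units fall apart for free, which is the fragmented, low-Φ case.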

Any network with a non-zero value of Φ has the potential to be conscious; there is no sharp threshold between catatonic and cognizant, which accords with the range of human experience. But IIT does have a one-mind-at-a-time rule. Any given neuron can participate in only one conscious entity at a time, so a hierarchical system can be conscious on only one level; other levels are merely epiphenomenal. For instance, the human cerebral cortex is conscious, but not the individual brain regions that make it up. To ascertain which level deserves to be called conscious, the software goes through all possible subsets of the network and calculates which of them has the highest value of Φ.
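Picking out the conscious level then means scoring every candidate subset and keeping the best. The sketch below reuses the toy Φ idea (redefined so the block stands alone). Units outside a subset are clamped to a fixed background state, which is one simple assumption among several possible. It returns only the single best subset, whereas IIT's actual exclusion rule is more involved.

```python
from collections import Counter
from itertools import combinations, product
from math import log2


def mutual_information(pairs):
    """I(X;Y) in bits for a list of equally likely (x, y) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum(c / n * log2(c * n / (px[x] * py[y])) for (x, y), c in joint.items())


def sub_phi(subset, rules, background):
    """Toy Phi of `subset`: units outside it are clamped to `background`,
    units inside it are tried in every combination."""
    if len(subset) < 2:
        return 0.0

    def crossing(a, b):
        # Information A's current state carries about B's next state.
        pairs = []
        for bits in product((0, 1), repeat=len(subset)):
            state = list(background)
            for unit, bit in zip(subset, bits):
                state[unit] = bit
            pairs.append((tuple(state[i] for i in a),
                          tuple(rules[j](state) for j in b)))
        return mutual_information(pairs)

    weakest = float("inf")
    for k in range(1, len(subset) // 2 + 1):
        for a in combinations(subset, k):
            b = tuple(u for u in subset if u not in a)
            weakest = min(weakest, crossing(a, b) + crossing(b, a))
    return weakest


def main_complex(rules, background):
    """The subset of two or more units with the highest toy Phi."""
    units = range(len(rules))
    candidates = [c for k in range(2, len(rules) + 1)
                  for c in combinations(units, k)]
    return max(candidates, key=lambda c: sub_phi(c, rules, background))


if __name__ == "__main__":
    # Hypothetical: units 0 and 1 copy each other; units 2 and 3 only copy themselves.
    rules = [lambda s: s[1], lambda s: s[0], lambda s: s[2], lambda s: s[3]]
    print(main_complex(rules, background=(0, 0, 0, 0)))  # (0, 1)
```

Adding a loosely attached unit to the tightly coupled pair lowers the score, because that unit can be cut away cheaply; this mirrors, in miniature, why only one level of a hierarchy wins.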

Tononi’s algorithm also looks across time, since a network might hum with activity at diverse rates, only one of which will qualify as the speed of thought. This, too, accords with our human reality. Your mind directly perceives changes occurring over a second or so, but is oblivious to neuronal pulses that last milliseconds and only dimly apprehends the longer arc of life. A conventional digital computer, on the other hand, has its Φ peak at the hardware level, Tononi says. Its thoughts are a sequence of low-level instructions – ‘let a=b’, ‘if/then’, ‘goto’ – rather than the higher level of abstraction that characterises human cognition, and it has more such thoughts in a second than you do in a lifetime. It might be able to thrash you at chess, but Tononi would quickly smoke it out as not being aware that it’s playing.
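Whether a network looks integrated can depend on the time grain. In the hypothetical two-unit network below, each unit copies the other on every tick. At a grain of one tick, each unit's future depends on its partner; at a grain of two ticks, the swaps cancel and each unit depends only on its own past. This sketch checks dependencies by flipping one unit at a time. It is a simpler stand-in for computing Φ at each grain, not Tononi's method.

```python
from itertools import product


def advance(state, rules, k):
    """Apply k synchronous ticks; k sets the time grain being examined."""
    for _ in range(k):
        state = tuple(rule(state) for rule in rules)
    return state


def dependency_matrix(rules, k):
    """dep[i][j] is True if flipping unit j now can change unit i k ticks later."""
    n = len(rules)
    dep = [[False] * n for _ in range(n)]
    for state in product((0, 1), repeat=n):
        base = advance(state, rules, k)
        for j in range(n):
            flipped = list(state)
            flipped[j] ^= 1
            out = advance(tuple(flipped), rules, k)
            for i in range(n):
                if out[i] != base[i]:
                    dep[i][j] = True
    return dep


if __name__ == "__main__":
    swap = [lambda s: s[1], lambda s: s[0]]  # hypothetical: each unit copies the other
    for k in (1, 2):
        print(k, dependency_matrix(swap, k))
```

At k = 1 the only dependencies run across the two units; at k = 2 they run only along the diagonal, so the same wiring looks integrated at one grain and split at the other.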

For the purpose of building a useful consciousness detector, IIT faces a couple of practical hurdles. For one, critics worry, it gives false positives. The MIT computer scientist Scott Aaronson offers the example of common computer error-correcting codes, which repair data that have been corrupted by hardware glitches or transmission noise. Such codes work by redundancy, so that if one bit of data is lost, the remaining bits can fill in for it. To get the most redundancy, all the bits of data must be interdependent – which is to say, they will have a high value of Φ. But few would ever accuse those codes of being conscious.
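The redundancy at issue can be seen in the simplest possible code, a single parity bit. This is only an illustration of remaining bits filling in for a lost one, not one of the more elaborate codes in Aaronson's argument. Because all the bits XOR to zero, any single erased bit at a known position can be rebuilt from the others.

```python
from functools import reduce
from operator import xor


def encode(data_bits):
    """Append one parity bit so that the XOR of all bits in the codeword is 0."""
    return list(data_bits) + [reduce(xor, data_bits, 0)]


def recover(codeword, lost_index):
    """Rebuild the bit at `lost_index` from the XOR of all the other bits."""
    others = [b for i, b in enumerate(codeword) if i != lost_index]
    return reduce(xor, others, 0)


if __name__ == "__main__":
    word = encode([1, 0, 1, 1])
    print(word, [recover(word, i) for i in range(len(word))])
```

Every bit is determined by all the others together, which is the kind of thorough interdependence that pushes Φ up in Aaronson's objection.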

Also, calculating Φ is taxing. The software has to consider all the possible ways to divide up a system, a huge number of permutations. You could waste a lifetime just trying to gauge the consciousness of your goldfish. Most discussions of IIT therefore do not consider hard values of Φ; rather, they appeal to the theory’s general interpretation of interconnectedness. For now, it’s really a qualitative theory masquerading as a quantitative one, although that might change with short-cuts for estimating Φ, which Tegmark has been developing.
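The growth is easy to quantify. A network of n units can be cut into two non-empty parts in 2^(n-1) - 1 ways, and there are 2^n - n - 1 subsets of two or more units that might be checked as candidate conscious entities. The sketch below computes both, with a brute-force check for small n. It ignores the further cost of perturbing every state for each cut, which makes the real calculation worse still.

```python
from itertools import combinations


def bipartitions(n):
    """Ways to cut n units into two non-empty, unlabelled parts."""
    return 2 ** (n - 1) - 1


def candidate_subsets(n):
    """Subsets with at least two units (all subsets minus the empty set and singletons)."""
    return 2 ** n - n - 1


def bipartitions_brute(n):
    """Enumerate the cuts directly, to check the formula for small n."""
    units = range(n)
    cuts = set()
    for k in range(1, n):
        for a in combinations(units, k):
            b = tuple(u for u in units if u not in a)
            cuts.add(frozenset((a, b)))
    return len(cuts)


if __name__ == "__main__":
    for n in (10, 20, 50, 100):
        print(f"{n:>3} units: {bipartitions(n):,} cuts, {candidate_subsets(n):,} subsets")
```

A hundred units already yield on the order of 10^29 cuts, and a brain has tens of billions of neurons.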

Fortunately, the qualitative is enough to get started on the consciousness detector. Tononi and his colleagues have already built such a device for human beings. It consists of a magnetic coil that stimulates an area of the brain (using a technique called transcranial magnetic stimulation) and EEG electrodes placed all around the scalp to measure brain waves. A magnetic pulse acts like a clapper striking a bell. An awake person’s brain reverberates, indicating a highly interconnected neural network. In deeply sleeping, anaesthetised, or vegetative people, the response is localised and muted. The technique can therefore tell you whether an unresponsive patient is locked in – physically unresponsive yet still conscious – or truly gone. So far, Tononi says his team has tried the experiment on 200 patients, with encouraging results.

That’s just the sort of contraption we need for testing potentially intelligent machines. Another, broadly similar, idea is to listen for inner speech, a little voice in the robot’s head. Haikonen’s XCR-1 robot, for example, keeps up a running internal monologue that mimics the self-conscious voice we hear inside our heads. XCR-1 has an inner voice because its various sensor modalities are all interconnected. If the visual subsystem sees a ball, the auditory subsystem hears the word ‘ball’ even if no one spoke it – almost a sort of synaesthesia. When Haikonen hooks up the auditory module to a speech engine, the robot babbles on like a garrulous grandparent.

The idea of information integration also allows researchers to be more rigorous in how they apply the standard tests of consciousness, such as those on which ConsScale is based. All those tests still face what you might call the zombie problem. How do you know your uncle, let alone your computer, isn’t a pod person – a zombie in the philosophical sense, going through the motions but lacking an internal life? He could look, act, and talk like your uncle, but have no experience of being your uncle. None of us can ever enter another mind, so we can never really know whether anyone’s home.

IIT translates the conceptual zombie problem into a specific question of measurement: can a mindless system (low Φ) pass itself off as a conscious one (having high Φ)? The disappointing initial answer is yes. A high-Φ system has feedback loops, which connect the output back to the input – making the system aware of what it is doing. But even though a low-Φ system lacks feedback, it can still do anything a feedback system can, so you can’t tell the difference based solely on outward behaviour.
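The equivalence is easy to demonstrate over a bounded stretch of time. Below, a feedback system keeps one bit of state, the running parity of its inputs, and feeds it back each tick. A feedforward stand-in reproduces the same outputs with a lookup table keyed on the entire input history. Outwardly the two cannot be told apart, but the table needs 2^(T+1) - 2 entries to cover histories up to length T. The parity task and the table design are illustrative choices, not taken from Tononi's work.

```python
from itertools import product


def recurrent_parity(inputs):
    """Feedback version: one bit of state is fed back and updated each tick."""
    state = 0
    outputs = []
    for x in inputs:
        state ^= x  # the previous output loops back into the next computation
        outputs.append(state)
    return outputs


def build_lookup_table(horizon):
    """Feedforward version: precompute the answer for every possible input history."""
    table = {}
    for length in range(1, horizon + 1):
        for history in product((0, 1), repeat=length):
            table[history] = sum(history) % 2
    return table


def feedforward_parity(inputs, table):
    """Answer each tick by looking up the whole history so far; no state is kept."""
    return [table[tuple(inputs[: t + 1])] for t in range(len(inputs))]


if __name__ == "__main__":
    table = build_lookup_table(6)
    sample = (1, 0, 1, 1, 0, 1)
    print(recurrent_parity(sample), feedforward_parity(sample, table), len(table))
```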

Yet you can often infer what’s happening on the inside from the constraints a system is operating under. For instance, the kind of internal feedback associated with higher-level cognition is more efficient at producing complex behaviours. It monitors the effect it produces and can fine-tune its actions in response. If you step on the gas and feel your car accelerate faster than you intended, you ease off. If you couldn’t make that adjustment, you’d have to get the action exactly right the first time. A system without feedback therefore has to build in an enormous decision tree with every possible condition spelled out in advance. ‘In order to be functionally equivalent to a system that has feedback, you need many more units and connections,’ Tononi says. Given limited resources, a zombie system will be much less capable than its feedback-endowed equivalent.
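The gas-pedal example can be sketched as a toy simulation. The car model (speed moves 10% of the way toward gain × throttle each step), the unknown gain and the adjustment rate are all hypothetical. The open-loop driver commits to one pedal position based on a guess; the feedback driver keeps nudging the pedal by the gap between target and actual speed.

```python
def drive(gain, target, feedback, steps=500, rate=0.05):
    """Simulate a toy car. `gain` (how hard the car responds to the pedal)
    is unknown to the driver, who initially assumes it is 1.0."""
    speed = 0.0
    throttle = target / 1.0  # initial pedal position from the driver's guess
    for _ in range(steps):
        if feedback:
            # Feel the result: ease off if too fast, press harder if too slow.
            throttle += rate * (target - speed)
        # Toy first-order response: speed moves 10% of the way toward gain * throttle.
        speed += 0.1 * (gain * throttle - speed)
    return speed


if __name__ == "__main__":
    for gain in (0.5, 1.0, 1.5):
        print(gain, round(drive(gain, 30.0, feedback=False), 2),
              round(drive(gain, 30.0, feedback=True), 2))
```

With a gain of 1.5, the open-loop driver settles at 45 instead of 30, while the feedback driver reaches 30 whatever the gain, because it never needs to know the gain in advance. To match that without feedback, the pedal setting would have to be worked out beforehand for every possible car.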

‘Zombies are not able to deploy the same behaviours we are able to do,’ says Arrabales. ‘They are like the zombies in the movies. They’re so stupid, because they don’t have our conscious mind.’ That puts zombies at a disadvantage in complex physical and social environments, so natural selection will prefer living minds. The same should be true for any AI that arises through adaptive techniques that mimic evolution. Now we’re getting somewhere. If we encounter a machine that can do what we can, and that must operate under the same bodily constraints that we do, the most parsimonious explanation will be that it is indeed conscious in every sense that we are conscious.

A test that applies only to consciousness among individual, corporeal entities is not exactly the kind of universal detector we are hoping for, however. What about group minds? Could the internet be conscious? What about biological groups, such as a nation or even Gaia – the biosphere as a whole? Groups have many of the essential qualities of conscious systems. They can act with purpose and be ‘aware’ of information. Last year, the philosopher Eric Schwitzgebel, of the University of California at Riverside, suggested that they also have full-blown phenomenal consciousness, citing IIT in defence of that view. After all, many organisations have an integrated, hierarchical structure reminiscent of the brain’s, so their Φ should be significant.

Tononi disagrees, noting that his one-mind-at-a-time rule excludes group consciousness. If individuals are conscious, a group of them cannot be; everything the group does reflects the will of the individuals in it, so no sense of self could ever take hold at the collective level. But what’s wrong with supposing that causation can occur on more than one level of description? Schwitzgebel offers a compelling thought-experiment. Imagine you replaced every neuron in your brain with a conscious bug, which has its own inner subjective experience but behaves outwardly just like a neuron. Would those critters usurp your consciousness and leave you an empty shell of a person? How could they possibly do that if nothing measurable about your brain activity has changed? It seems more straightforward to assume that the critters are conscious and so are you, in which case consciousness can emerge at multiple levels. How to identify and measure consciousness at the group level, let alone communicate with such entities, is a truly open problem.

Tackling those big problems is important, however. Building a consciousness detector is not just an intellectually fascinating idea. It is morally urgent – not so much because of what these systems could do to us, but because of what we could do to them. Dumb robots are plenty dangerous already, so conscious ones needn’t pose a special threat. To the contrary, they are just as likely to put us to shame by displaying higher forms of morality. And for want of recognising what we have brought into the world, we could be guilty of what Bostrom calls ‘mind crime’ – the creation of sentient beings for virtual enslavement. In fact, Schwitzgebel argues that we have greater responsibility to intelligent machines than to our fellow human beings, in the way that the parent bears a special responsibility to the child.

We are already encountering systems that act as if they were conscious. Our reaction to them depends on whether we think they really are, so tools such as Integrated Information Theory will be our ethical lamplights. Tononi says: ‘The majority of people these days would still say, “Oh, no, no, it’s just a machine”, but they have just the wrong notion of a machine. They are still stuck with cold things sitting on the table or doing clunky things. They are not yet prepared for a machine that can really fool you. When that happens – and it shows emotion in a way that makes you cry and quotes poetry and this and that – I think there will be a gigantic switch. Everybody is going to say, “For God’s sake, how can we turn that thing off?”’