When Alan Turing turned his attention to artificial intelligence, there was probably no one in the world better equipped for the task. His paper ‘Computing Machinery and Intelligence’ (1950) is still one of the most frequently cited in the field. Turing died young, however, and for a long time most of his work remained either classified or otherwise inaccessible. So it is perhaps not surprising that there are important lessons left to learn from him, including about the philosophical foundations of AI.

Turing’s thinking on this topic was far ahead of everyone else’s, partly because he had discovered the fundamental principle of modern computing machinery – the stored-program design – as early as 1936 (a full 12 years before the first modern computer was actually engineered). Turing had completed his first degree in mathematics at King’s College, Cambridge, only two years earlier, in 1934. His article ‘On Computable Numbers’ (1936), one of the most important mathematical papers in history, described an abstract digital computing machine, known today as a universal Turing machine.

Virtually all modern computers are modelled on Turing’s idea. However, he originally conceived these machines merely because he saw that a human engaged in the process of computing could be compared to one, in a way that was useful for mathematics. His aim was to define the subset of real numbers that are computable in principle, independently of time and space. For this reason, he needed his imaginary computing machine to be maximally powerful.

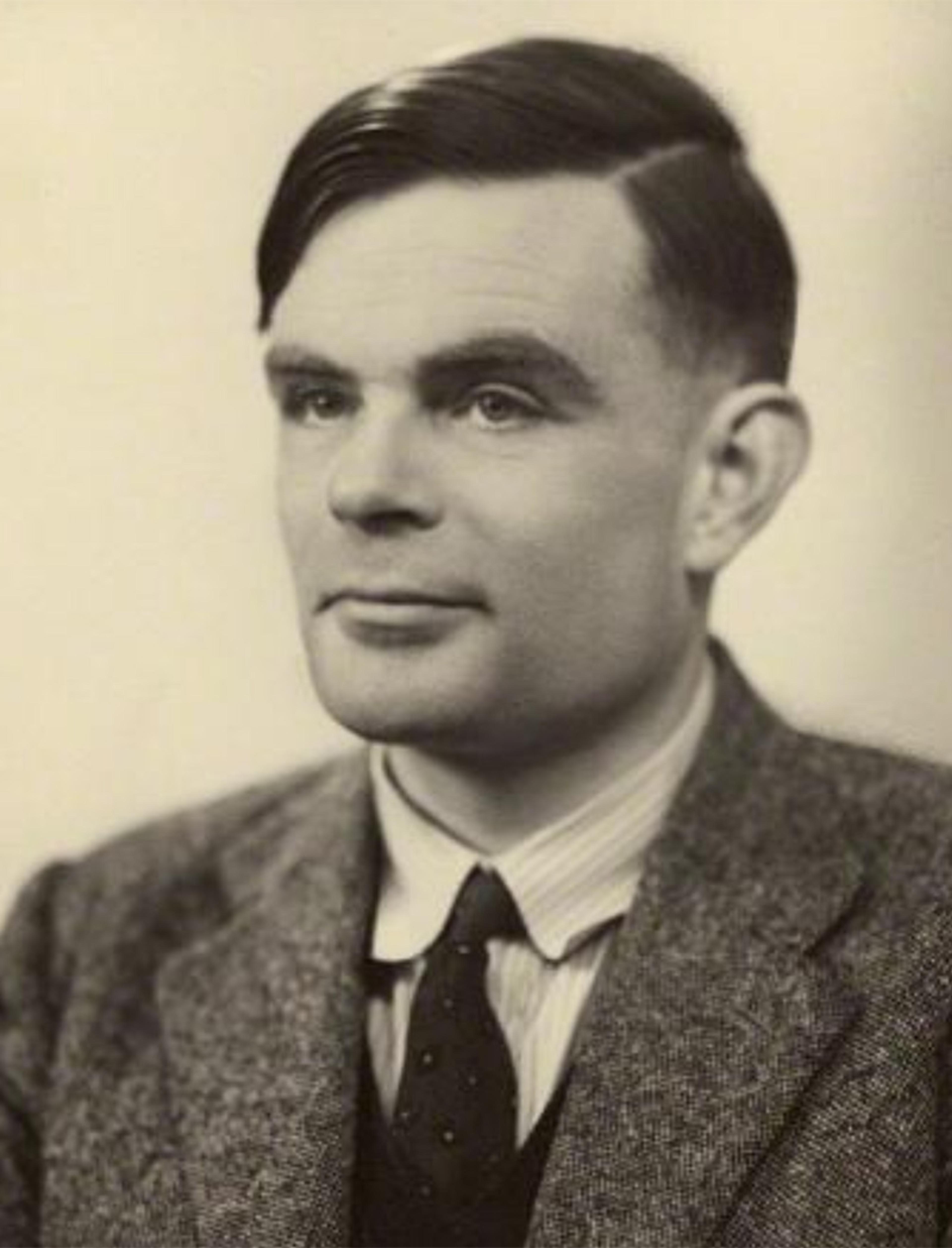

Alan Turing photographed by Elliott and Fry in 1951. Courtesy the National Portrait Gallery, London

To achieve this, he first imagined an infinite supply of tape (the storage medium of the imaginary machine). Most importantly, he discovered a way of setting the machine’s central mechanism so that it could imitate any other possible setting of that mechanism, even though the mechanism can be set in infinitely many different ways to do one thing or another in response to what it scans on the tape. The essential ingredient of this method is the stored-program design: a universal Turing machine can imitate any other Turing machine only because – as Turing noted – the basic programming of the central mechanism (ie, the way the mechanism is set) can itself be stored on the tape, and hence can be scanned, written and erased. Thus, Turing specified a type of machine that could compute any real number, and indeed anything whatsoever, that could possibly be computed by a machine that scans, prints and erases automatically according to a given set of instructions; moreover, to the extent that the basic analogy with a human in the process of computing holds, anything that a human could possibly compute.
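The idea that a machine’s ‘setting’ can be treated as data is easy to illustrate in a few lines of Python. What follows is a minimal sketch, not Turing’s own construction: the rule format, the function names and the two example machines are all invented for illustration, and the interpreter is an ordinary Python function rather than a Turing machine reading its program from the same tape as its input. The point is only that one fixed mechanism (`simulate`) behaves like whichever machine is described by the text it is handed.

```python
from collections import defaultdict

BLANK = "_"

def parse_table(description):
    """Decode a machine description (plain text) into a transition table.

    Assumed format, invented for this sketch: rules separated by ';',
    each written as 'state,scanned>write,move,next_state'.
    """
    table = {}
    for rule in description.split(";"):
        condition, action = rule.split(">")
        state, scanned = condition.split(",")
        write, move, next_state = action.split(",")
        table[(state, scanned)] = (write, move, next_state)
    return table

def simulate(description, tape_input, start="q0", halt="halt", max_steps=10_000):
    """Run whichever machine `description` encodes on `tape_input`."""
    table = parse_table(description)
    # Unvisited cells read as blank, standing in for an unbounded tape.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted")
        write, move, state = table[(state, tape[head])]
        tape[head] = write                    # print (or erase) on the scanned cell
        head += 1 if move == "R" else -1      # move one cell right or left
        steps += 1
    if not tape:
        return ""
    cells = (tape[i] for i in range(min(tape), max(tape) + 1))
    return "".join(cells).strip(BLANK)

# Two different machines, each given as nothing more than a string of symbols.
INVERT = "q0,0>1,R,q0;q0,1>0,R,q0;q0,_>_,R,halt"   # flips every bit
SUCCESSOR = "q0,1>1,R,q0;q0,_>1,R,halt"            # appends a 1 (unary +1)

print(simulate(INVERT, "1011"))     # 0100
print(simulate(SUCCESSOR, "111"))   # 1111
```

Because the descriptions are ordinary strings, a program could in principle read, rewrite or generate them, which is the property the later discussion of machines altering their own instructions relies on.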

It is important to understand that the stored-program design is not only the most fundamental principle of modern computing – it also already contains a deep insight into the limits of machine learning: namely, that there is nothing that such a machine can do in principle that it cannot in principle figure out for itself. Turing saw this implication and its practical potential very early on. And he soon became very interested in the question of machine learning, several years before the stored-program design was first implemented in an actual machine.

As Turing’s Cambridge teacher, life-long collaborator and fellow computer pioneer Max Newman wrote: ‘The description that he gave of a “universal” computing machine was entirely theoretical in purpose, but Turing’s strong interest in all kinds of practical experiment made him even then interested in the possibility of actually constructing a machine on these lines.’

During the Second World War, Turing learnt about advances in high-speed electronic switching (using vacuum tubes) and witnessed the birth of the first fully functioning electronic digital computer, Colossus, which was used by British cryptanalysts from early 1944. Colossus did not have its basic programming stored internally, however, and was generally far from being a universal – or, in modern terms, ‘general-purpose’ – computer. Rather, in order to make it perform any of a small number of different tasks, the machine first had to be programmed by hand using various plugs and switches. But in June 1945, just weeks after Germany had surrendered, Turing was hired by the UK’s National Physical Laboratory to lead the development of an electronic version of his universal computing machine. He completed a workable proposal before the end of the year, which represented the most complete specification yet of an electronic stored-program general-purpose digital computer, including exceptionally detailed descriptions of how the electronic hardware would need to be engineered. Within three years, Turing’s proposal led to the first operational modern computer.

According to his wartime colleagues, Turing had already given serious thought to the possibility of machine intelligence for some time before 1945, especially machine learning and heuristic search. In his 1945 proposal, he briefly notes: ‘There are indications … that it is possible to make the machine display intelligence at the risk of its making occasional serious mistakes.’ The following year, 1946, he devoted much of his energy to pioneering research on programming, which he rightly believed was key to future development. In February 1947, he gave a lecture to the London Mathematical Society, which was probably the first public scientific presentation on the subject of AI.

He began by telling his audience about universal Turing machines. He explained that what was being developed ‘may be regarded as practical versions of this same type of machine’, and that these machines can thus ‘be made to do any job that could be done by a human computer’. He further explained how these machines might be used for mathematical research in the future, as well as their likely impact on the nature and number of jobs for mathematicians. ‘This topic,’ he noted, ‘naturally leads to the question as to how far it is possible in principle for a computing machine to simulate human activities.’ He was quite explicit about which human activity would be most important to try to simulate. ‘What we want is a machine that can learn from experience,’ he said.

Turing was equally clear that this could be done, and how: ‘The possibility of letting the machine alter its own instructions provides the mechanism for this.’ In other words, the stored-program design makes it possible. ‘But,’ he added, ‘this of course does not get us very far.’ After all, programming was not even in its infancy then (terms such as ‘learning algorithm’ did not yet exist), not to mention the fact that the machine he was referring to (the modern computer) was only just being built.

A related problem that Turing was keen to address is purely philosophical in nature. This is the issue that probably occupied him the most for the rest of his life. And it is the same problem that gave rise to the most widely discussed concept from his philosophy of AI, what is now known as the Turing test. Seeing the evolution of Turing’s thinking in this connection, both before and after the formulation of his famous test, will help us avoid at least the crudest yet all-too-common misunderstandings of what Turing was trying to do.

‘It might be argued that there is a fundamental contradiction in the idea of a machine with intelligence,’ is how he began his final reflections in the lecture, which culminated in his ‘plea for “fair play for the machines”’. He illustrated what he had in mind with a little thought experiment, which may be regarded as an early precursor to the Turing test:

Let us suppose we have set up a machine with certain initial instruction tables [ie, programs], so constructed that these tables might on occasion, if good reason arose, modify those tables. One can imagine that after the machine had been operating for some time, the instructions would have altered out of all recognition, but nevertheless still be such that one would have to admit that the machine was still doing very worthwhile calculations. Possibly it might still be getting results of the type desired when the machine was first set up, but in a much more efficient manner.

Commenting on this case, he then added:

In such a case one would have to admit that the progress of the machine had not been foreseen when its original instructions were put in. It would be like a pupil who had learnt much from his master, but had added much more by his own work. When this happens I feel that one is obliged to regard the machine as showing intelligence.

Turing knew that whatever he, or others, might feel about such a case was less important than whether machine intelligence is really possible. But he also knew – as well as anyone – that conceptual clarity at the fundamental level, such as might be achieved through philosophical reflection, was going to be crucial to any major scientific advancement in the right direction. Arguably, all of his philosophical work had only this instrumental aim of conceptual clarity. It was certainly always characteristic of his work that the two – philosophy and science (or, more generally, fundamental science and applied science) – went hand in hand in this way. In the lecture, this is shown by his immediate continuation from the passage above: ‘As soon as one can provide a reasonably large memory capacity it should be possible to begin to experiment on these lines.’

Turing’s strong interest in practical experimentation was one reason why he consistently advocated for the development of high-speed, large-memory machines with a minimally complex hardware architecture (child machines, as he would later call them), so as to give the greatest possible freedom to programming, including a machine’s reprogramming itself (ie, machine learning). Thus, he explained:

I have spent a considerable time in this lecture on this question of memory, because I believe that the provision of proper storage is the key to the problem of the digital computer, and certainly if they are to be persuaded to show any sort of genuine intelligence.

In a letter from the same period, he wrote: ‘I am more interested in the possibility of producing models of the action of the brain than in the practical applications to computing.’ Due to his true scientific interests in the development of computing technology, Turing had quickly become frustrated by the ongoing engineering work at the National Physical Laboratory, which was not only slow due to poor organisation but also vastly less ambitious in terms of speed and storage capacity than he wanted it to be. In mid-1947, he requested a 12-month leave of absence. The laboratory’s director, Charles Darwin (grandson of the naturalist Charles Darwin), supported this, and the request was granted. In a letter from July that year, Darwin described Turing’s reasons as follows:

He wants to extend his work on the machine still further towards the biological side. I can best describe it by saying that hitherto the machine has been planned for work equivalent to that of the lower parts of the brain, and he wants to see how much a machine can do for the higher ones; for example, could a machine be made that could learn by experience?

The result of this research, which did indeed focus on the question of learning, was a groundbreaking typescript entitled ‘Intelligent Machinery’. The philosopher Jack Copeland, director of the Turing Archive for the History of Computing in New Zealand, has described this paper as the first manifesto of AI, and that seems accurate as far as our present historical knowledge goes. The final version was written in 1948. However, it was not appreciated at the laboratory, where Darwin reportedly derided it as a ‘schoolboy’s essay’ and decided it was not suitable for publication. It remained unpublished until 1968, and has subsequently received little attention.

Yet the paper anticipates many important ideas and techniques in both the logic-based and connectionist (neural networks, etc) approaches to AI. In particular, Turing gives a detailed description of an artificial neural network that can be trained using reinforcement learning (‘reward’ vs ‘punishment’ feedback, etc) and genetic algorithms. His own summary at the end of the paper gives a flavour of its groundbreaking character:

The possible ways in which machinery might be made to show intelligent behaviour are discussed. The analogy with the human brain is used as a guiding principle. It is pointed out that the potentialities of the human intelligence can only be realised if suitable education is provided. The investigation mainly centres round an analogous teaching process applied to machines. The idea of an unorganised machine is defined, and it is suggested that the infant human cortex is of this nature. Simple examples of such machines are given, and their education by means of rewards and punishments is discussed. In one case the education process is carried through …
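To give a rough sense of what ‘education by means of rewards and punishments’ can mean computationally, here is a toy Python sketch. It is a deliberately simple stand-in and not a reconstruction of the unorganised machines in Turing’s paper: the function `train`, the stimulus names and the teacher’s target table are all hypothetical. The learner starts with no fixed responses, tries a random response to each stimulus, keeps the response permanently when it is rewarded and discards it when it is punished.

```python
import random

def train(target, rng, max_rounds=1000):
    """Teach a response table using only reward and punishment signals.

    `target` maps each stimulus to the response the (hypothetical) teacher
    wants; the learner never reads it directly, it only finds out whether
    a guess was rewarded or punished.
    """
    stimuli = sorted(target)
    actions = sorted(set(target.values()))
    table = {}                                # responses fixed so far
    for _ in range(max_rounds):
        if len(table) == len(stimuli):
            break                             # every stimulus has a fixed response
        stimulus = rng.choice(stimuli)
        if stimulus in table:
            continue                          # already educated on this one
        guess = rng.choice(actions)           # a tentative response
        if guess == target[stimulus]:         # teacher signals reward
            table[stimulus] = guess           # the tentative response is kept
        # otherwise the teacher signals punishment and the guess is dropped
    return table

lesson = {"a": "0", "b": "1", "c": "1"}
learned = train(lesson, random.Random(0))
print(learned == lesson)
```

The teacher never tells the learner what the right response is, only whether the last attempt was good or bad, which is the feature that distinguishes this kind of training from simply loading a finished table into the machine.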

Turing never returned to the National Physical Laboratory after his research leave. Instead, in May 1948, he joined his friend Newman’s Computing Machine Laboratory at the University of Manchester, where shortly afterwards the world’s first electronic stored-program general-purpose digital computer, the Small-Scale Experimental Machine (commonly known as the Manchester Baby), ran its first program.

Turing spent most of the remaining six years of his life continuing his research on AI. After completing the programming system of the expanded Manchester Mark I machine and the subsequent Ferranti Mark I, the world’s first commercially available modern computer (manufactured by Ferranti Ltd), in early 1951 Turing began experimenting on the Ferranti. The early results of his computational modelling of biological growth were published in the paper ‘The Chemical Basis of Morphogenesis’ (1952), which represented an important early contribution to research on artificial life.

Another paper he wrote describes a chess learning algorithm using genetic search, which may well have been what he had in mind in his 1945 proposal when he wrote that:

there are indications … that it is possible to make the machine display intelligence at the risk of its making occasional serious mistakes. By following up this aspect the machine could probably be made to play very good chess.

But, in particular, Turing continued his work on the philosophy of AI and actively attempted to advance academic and public discourse on the subject. For example, there survive minutes from an October 1949 philosophy seminar discussion between Turing, Newman, the neurosurgeon Geoffrey Jefferson and Michael Polanyi, who was then professor of social science at Manchester, on ‘the mind and the computing machine’. The next year saw the publication of Turing’s paper ‘Computing Machinery and Intelligence’. Moreover, he appeared on at least three BBC radio shows during the early 1950s. Although there are no known recordings, the scripts were published in 2004. The first is a brief lecture entitled ‘Intelligent Machinery, A Heretical Theory’, probably first broadcast in 1951, in which his stated objective is to question the commonly held belief ‘You cannot make a machine to think for you’ by explaining, and reflecting on, the technique of reinforcement learning.

The second is a short lecture on the question ‘Can Digital Computers Think?’, in which Turing briefly introduces the universality of stored-program computers, before advancing the following argument:

If any machine can appropriately be described as a brain, then any digital computer can be so described … If it is accepted that real brains … are a sort of machine it will follow that our digital computer, suitably programmed, will behave like a brain.

However, if this is what the process of ‘programming a machine to think’ requires, he points out, doing so will be like writing a treatise about family life on a distant planet that is merely known to exist (Turing’s example at the time was family life on Mars). ‘The fact is,’ he went on to explain, ‘that we know very little about it [how to program a machine to behave like a brain], and very little research has yet been done.’ He adds: ‘I will only say this, that I believe the process should bear a close relation of that of teaching.’

The third and final programme Turing participated in (first broadcast in 1952) is a discussion with Newman and Jefferson, chaired by the Cambridge philosopher R B Braithwaite, on the question ‘Can Automatic Calculating Machines Be Said to Think?’ At the outset, the participants agree that there would be no point in trying to give a general definition of thinking. Turing then introduces a variation on the ‘imitation game’, or the Turing test. In his 1950 paper, he says that he is introducing the imitation game in order to replace the question he is considering – ‘Can machines think?’ – with one ‘which is closely related to it and is expressed in relatively unambiguous words’. The paper’s version of the game, which is slightly more sophisticated, consists of a human judge trying to determine which of two contestants is a human and which a machine, on the sole basis of remote communication using typewritten text messages, with the other human trying to help the judge while the machine pretends to be a human. Turing says that:

[T]he question, ‘Can machines think?’ should be replaced by ‘Are there imaginable digital computers which would do well in the imitation game?’
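The structure of the game, as opposed to its philosophical significance, can be laid out as a short Python sketch. Everything here is an illustrative assumption: the function names, the labels X and Y, and especially the toy participants, whose ‘conversation’ is far cruder than anything Turing had in mind. The sketch shows only the protocol: identities are hidden behind labels, the judge sees nothing but typed answers, and the outcome is whether the judge picks out the machine.

```python
import random

def imitation_game(judge, human, machine, questions, rng):
    """Play one round; return True if the judge correctly picks the machine."""
    # Hide the two contestants behind the labels X and Y, assigned at random.
    players = [human, machine]
    rng.shuffle(players)
    hidden = dict(zip("XY", players))
    # The judge receives only text: each label's answers to each question.
    transcript = {
        label: [(question, respond(question)) for question in questions]
        for label, respond in hidden.items()
    }
    guess = judge(transcript)        # the label the judge believes is the machine
    return hidden[guess] is machine

# Toy participants, purely hypothetical.
def human(question):
    return f"Let me think about '{question}' for a moment."

def crude_machine(question):
    return "I am certainly a human."          # same reply to everything

def mimic_machine(question):
    return human(question)                    # an idealised perfect imitator

def judge(transcript):
    # Suspect whichever contestant gives the same answer to every question.
    for label, exchanges in transcript.items():
        if len({answer for _, answer in exchanges}) == 1:
            return label
    return "X"                                # cannot tell, so guess

rng = random.Random(0)
questions = ["Do you play chess?", "Please write me a sonnet."]
rounds = 50
caught = sum(imitation_game(judge, human, crude_machine, questions, rng)
             for _ in range(rounds))
print(caught / rounds)   # 1.0: the crude machine is identified every time
```

Against `mimic_machine` the same judge is reduced to guessing. In the terms of the sketch, ‘doing well in the imitation game’ means driving the judge’s success rate down towards chance.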

Although its fame alone is proof that the imitation game has served its intended purpose, it has also been fundamentally misunderstood. Indeed, the value of Turing’s work on AI has traditionally been reduced, by philosophers and computer scientists alike, to various distorted accounts of its purpose. For example, in an influential critique, the philosopher John Searle in 1980 complained that ‘the Turing test is typical of the tradition in being unashamedly behaviouristic’ (that is, it reduces psychology to the observation of outward behaviour), while the computer scientists Stuart Russell and Peter Norvig, authors of the world’s most widely used AI textbook, write in one chapter:

Few AI researchers pay attention to the Turing test, preferring to concentrate on their systems’ performance on practical tasks, rather than the ability to imitate humans.

But a fresh look at the 1950 paper shows that Turing’s aim clearly went beyond merely defining thinking (or intelligence) – contrary to the way in which philosophers such as Searle have tended to read him – or merely operationalising the concept, as computer scientists have often understood him. In particular, contra Searle and his ilk, Turing was clearly aware that a machine’s doing well in the imitation game is neither a necessary nor a sufficient criterion for thinking or intelligence. This is how he explains the similar test that he presents in the radio discussion:

You might call it a test to see whether the machine thinks, but it would be better to avoid begging the question, and say that the machines that pass are (let’s say) ‘Grade A’ machines … My suggestion is just that this is the question we should discuss. It’s not the same as ‘Do machines think,’ but it seems near enough for our present purpose, and raises much the same difficulties.

This passage, along with Turing’s other writings and public speeches on the philosophy of AI (including all those described above), has received little attention. However, taken together, these writings provide a clear picture of what his primary goal was in formulating the imitation game. For instance, they show that, from 1947 onwards (and perhaps earlier), in pursuit of the same general goal, Turing in fact proposed not one but many tests for comparing humans and machines. These tests concerned learning, thinking and intelligence, and could be applied to various smaller and bigger tasks, including simple problem-solving, games such as chess and Go, as well as general conversation. But his primary goal was never merely to define or operationalise any of these things. Rather, it was always more fundamental and progressive in nature: namely, to prepare the conceptual ground, carefully and rigorously in the manner of the mathematical philosopher that he was, on which future computing technology could be successfully conceived, first by scientists and engineers, and later by policymakers and society at large.

It is widely overlooked that perhaps the most important forerunner of the imitation game is found in the short final section of Turing’s long-unpublished AI research paper of 1948, under the heading ‘Intelligence as an Emotional Concept’. This section makes it quite obvious that the central purpose of introducing a test such as the imitation game is to clear away misunderstandings that our ordinary concepts and the ordinary use we make of them are otherwise likely to produce. As Turing explains:

The extent to which we regard something as behaving in an intelligent manner is determined as much by our own state of mind and training as by the properties of the object under consideration. If we are able to explain and predict its behaviour or if there seems to be little underlying plan, we have little temptation to imagine intelligence.

We want our scientific judgment as to whether something is intelligent or not to be objective, at least to the extent that our judgment will not depend on our own state of mind; for instance, on whether we are able to explain the relevant behaviour or whether we perhaps fear the possibility of intelligence in a given case. For this reason – as he also explained in each of the three radio broadcasts and in his 1950 paper – Turing proposed ways of eliminating the emotional components of our ordinary concepts. In the 1948 paper, he wrote:

It is possible to do a little experiment on these lines, even at the present stage of knowledge. It is not difficult to devise a paper machine [ie, a program written on paper] which will play a not very bad game of chess. Now get three men as subjects for the experiment A, B, C. A and C are to be rather poor chess players, B is the operator who works the paper machine. (In order that he should be able to work it fairly fast it is advisable that he be both mathematician and chess player.) Two rooms are used with some arrangement for communicating moves, and a game is played between C and either A or the paper machine. C may find it quite difficult to tell which he is playing.

It is true that, in addition to his conceptual work, Turing advanced numerous philosophical arguments to defend the possibility of machine intelligence, anticipating – and, arguably, refuting – all of the most influential objections (from the Lucas-Penrose argument to Hubert Dreyfus’s critique to the argument from consciousness). But that is markedly different from providing metaphysical arguments in favour of the existence of machine intelligence, which Turing emphatically refused to do.

There is no reason to believe Turing was not being serious when, in his 1950 paper, he said:

The reader will have anticipated that I have no very convincing arguments of a positive nature to support my views. If I had I should not have taken such pains to point out the fallacies in contrary views.

But he was always careful enough to express his own views not in terms of our ordinary concepts – eg, whether machines can ‘think’ – but strictly in terms of conjectures about when machines could be expected to perform at the human level on a (more or less) objectively measurable task (such as the imitation game). At the same time, he most certainly disagreed with the view, expressed by the computer scientist Edsger Dijkstra in 1984 and still popular among AI researchers today, that ‘the question of whether Machines Can Think … is about as relevant as the question of whether Submarines Can Swim’. On the contrary, Turing was fully aware of the cultural, political and scientific importance of this kind of question. For example, in one radio broadcast, ‘Can Digital Computers Think?’, he ends by asking:

If a machine can think, it might think more intelligently than we do, and then where should we be? Even if we could keep the machines in a subservient position, for instance by turning off the power at strategic moments, we should, as a species, feel greatly humbled. … This new danger … if it comes at all … is remote but not astronomically remote, and is certainly something which can give us anxiety. It is customary, in a talk or article on this subject, to offer a grain of comfort, in the form of a statement that some particularly human characteristic could never be imitated by a machine. It might for instance be said that no machine could write good English, or that it could not be influenced by sex-appeal or smoke a pipe. I cannot offer any such comfort, for I believe that no such bounds can be set.

Lastly, he points out the importance of the question for the study of human cognition:

The whole thinking process is still rather mysterious to us, but I believe that the attempt to make a thinking machine will help us greatly in finding out how we think ourselves.

Today, we can confidently say that he was right; the attempt to make a thinking machine certainly has helped us in this way. Moreover, he also correctly predicted in his 1950 paper that ‘at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted’. He did not mean, of course, that the problem of minds and machines would have been solved. In fact, the problem has become only more urgent. Ongoing advances in affective computing and bioengineering will move more and more people to believe that not only can machines think, they can also feel, perhaps deserve certain legal rights, etc. Yet others (such as Roger Penrose) may still reasonably deny that computers can even compute.

It was Turing’s fundamental conceptual work in combination with his practical, experimental approach that allowed him not only to conceive the fundamental principle of modern computing in 1935-36 but also, in 1947-48, to anticipate what are now, more than 70 years later, some of the most successful theoretical approaches in the field of AI and machine learning. Arguably, it was this combination that allowed him to progress from a schoolboy whose work in mathematics was judged promising yet untidy, and whose ideas were considered ‘vague’ and ‘grandiose’ by his teachers, to being one of the most innovative minds of the 20th century.