At the end of his life, the English naturalist Charles Darwin became intrigued by the musicality of worms. In the last book he ever wrote, in 1881, he describes a series of experiments on his vermicular subjects. Worms, Darwin discovered, are sensitive to vibrations when transmitted through a solid surface, but tone-deaf and unresponsive to the shriek of a whistle or the bellow of a bassoon.

Earlier, in the 1760s, the French natural philosopher Comte de Buffon heated up balls of iron and other minerals until they were white-hot. Then, by sense of touch alone, he recorded how long it took them to cool to room temperature.

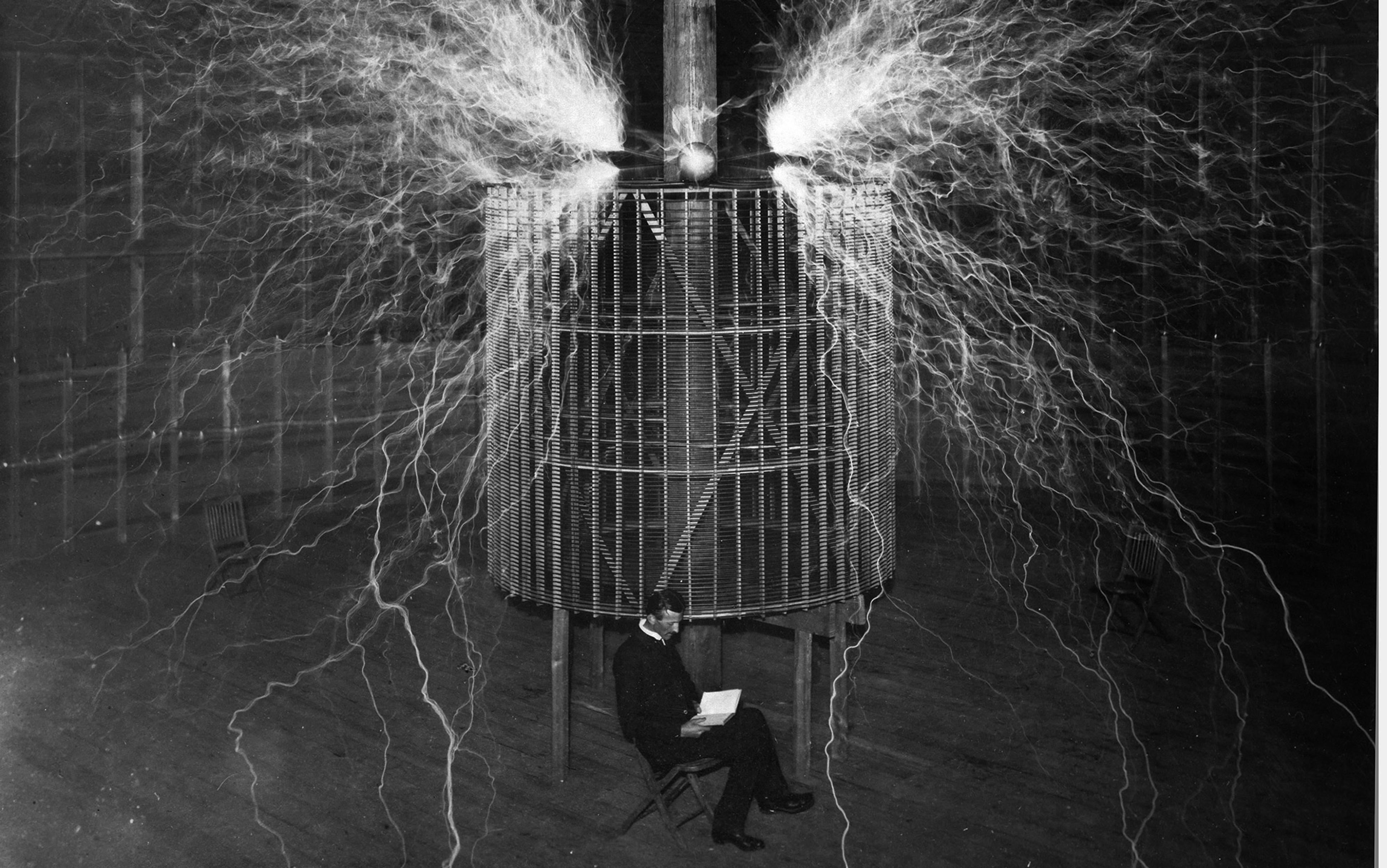

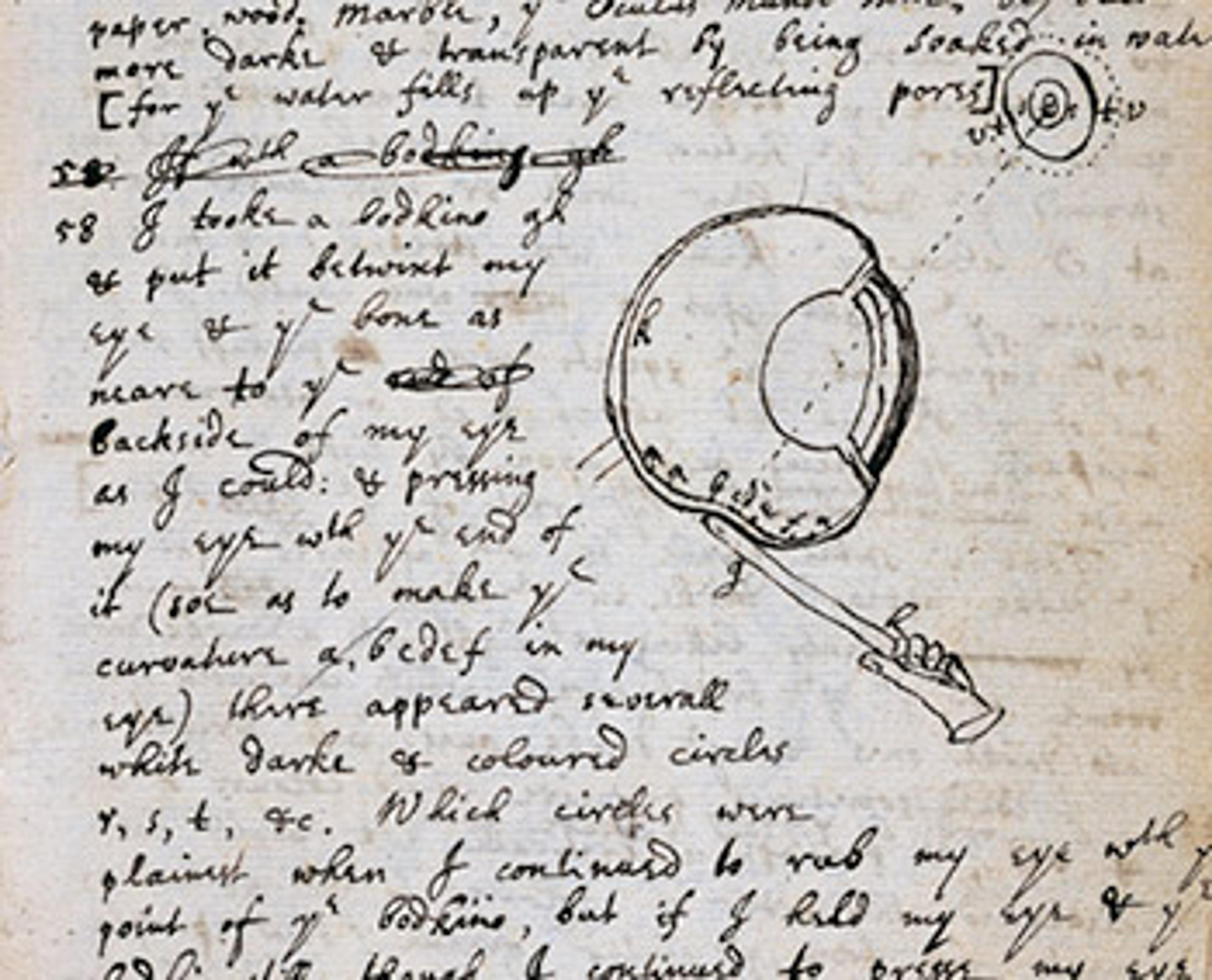

A hundred years before that, Isaac Newton wrote about the time he slid a bodkin – a kind of thick tailor’s needle – between his skull and his eye, and rubbed the needle so as to distort the shape of his own eyeball.

‘An Experiment to Put Pressure on the Eye’, from Isaac Newton’s notebooks (1665-6). Photo courtesy Cambridge University Library

These experiments are all pretty wacky, but they still bear the mark of the scientific. Each one involves the careful recording and assessment of data. Darwin was excluding the hypothesis that hearing explained earthworm behaviour; Buffon extrapolated the age of the Earth from a wide range of geological materials (his estimate: 75,000 years); and Newton’s unpleasant self-surgery helped to develop his theory of optics, by clarifying the relationship between the eye’s geometry and the resulting visual effects. Their methods might have been unorthodox, but they were following their intellectual instincts about what the enquiry demanded. They had licence to be scientific mavericks.

The word ‘maverick’ has a surprising history. In the 1860s, a Texan lawyer and rancher stopped branding his cattle. Of course, the unbranded livestock quickly became identified as his. The man’s name? Samuel Maverick. By the 1880s, through one of those strange transformations so distinctive of language, the term had come to mean anyone who refuses to abide by the rules. I like the connection with cattle: where most cows and steers follow the herd, some – the unbranded – find their own path.

Nowadays scientists tend to shun the ‘maverick’ label. If you’ve hung out in a lab lately, you’ll notice that scientific researchers are often terrible gossips. Being labelled a ‘maverick’, a ‘crank’ or a ‘little bit crazy’ can be career-killing. The result is what the philosopher Huw Price at the University of Cambridge calls ‘reputation traps’: if an area of study gets a bad smell, a waft of the illegitimate, serious scientists won’t go anywhere near it.

Mavericks such as Newton, Buffon and Darwin operated in a very different time to our own. Theirs was the age of the ‘gentleman scholar’, in which research was pursued by a moneyed class with time to kill. Today, though, modern science encourages conformity. For a start, you need to get a degree to become a scientist of some stripe. You also need to publish, get peer-reviewed, obtain money from a funder, and find a job. These things all mould the young scientist: you aren’t just taught proper pipette technique, but also take on a kind of disciplinary worldview. The process of acculturation is part of what the philosopher and historian Thomas Kuhn called a ‘paradigm’, a set of values, practices and basic concepts that scientists hold in common.

On top of this standardisation, careers in science are now extremely hard to come by. There’s a scarcity of jobs compared with the number of applicants, and very few high-ranking and ‘big impact’ journals. This means that the research decisions that scientists make, particularly early on, are high-risk wagers about what will be fruitful and lead to a decent career. The road to academic stardom (and, for that matter, academic mediocrity) is littered with brilliant, passionate people who simply made bad bets. In such an environment, researchers are bound to be conservative – with the stakes set so high, taking a punt on something outlandish that you know is likely to hurt your career is not a winning move.

Of course, all these filters help to ensure that the science we read about is well-supported and reliable, compared with Darwin’s day. There’s much good in sharing a paradigm; it makes communication easier and helps knowledge accumulate from a common base. But professional training also involves learning how to convince colleagues in your field that your work is legitimate, that it meets their ideas of what the good questions are and what good answers look like. This makes science more productive, but less creative. Enquiries can become hidebound and unadventurous. As a result, truly revolutionary research – the domain of the maverick – is increasingly hard to pursue.

The biologist Barbara McClintock devoted enormous effort to, and paid a very high price for, her path-breaking research into so-called ‘jumping genes’ in the mid-20th century. She was certainly no scientific outsider – by the 1940s, she was widely recognised for her foundational work on heredity. She took up several fellowships at Cornell University, worked at Stanford University, and had a permanent research position at Cold Spring Harbor Laboratory, with membership of the National Academy of Sciences to boot. But things changed when she became interested in how genes were controlled – that is, how identical genetic sequences could express themselves differently at various stages of growth and in different parts of the same living structure.

It was widely believed that a cell’s chromosomes consisted of a fixed line-up of genes, which were like a static blueprint or map for the organism. What McClintock found, instead, was that the genes in any particular cell could move around on the chromosome, as well as turn themselves on and off in response to environmental factors, other genes, and bits and pieces of the developmental soup. The response from the scientific community was initially hostile – not to the basic phenomenon McClintock had discovered, but to the complex, interwoven picture of biological systems that she developed on the back of the discovery. For 20 years, McClintock was forced to switch gears completely and work on the study of maize. It wasn’t until the 1970s that her peers came around, and she was duly awarded the Nobel Prize in 1983. ‘One must await the right time for conceptual change,’ she wrote in a shoulder-shrugging letter to a fellow geneticist in 1973.

Even from a position of scientific respectability, ‘maverick’ thinking such as McClintock’s can involve decades in the academic hinterlands. Modern science might be a high-output activity, but it seems to lack an appreciation for the freewheeling inventiveness of its forebears. Surely, then, that’s why we need to cherish and preserve these mavericks, to make space for the visionary who is willing to swim against the tide, for the unappreciated genius who will break rank? Mavericks are outsiders by definition, and if science wants to challenge its own orthodoxies, it needs outsiders. Or so the story goes.

Perhaps this tale already contains the germ of a solution: we should recover the ‘gentleman (and gentlewoman) scholar’, in the form of the modern-day entrepreneur, venture capitalist or Silicon Valley billionaire. In July 2012, a Californian businessman called Russ George, in collaboration with the Old Massett Village of the Haida Nation, arranged for 100 tonnes of iron sulphate to be dumped into the Pacific Ocean off the west coast of Canada. It was a massive experiment with a geoengineering technique called ocean fertilisation. The idea was to give a huge boost to the local phytoplankton, those tiny microorganisms that teem all over the Earth’s waters and need iron to photosynthesise. They would then act as a food source to help replenish local fish supplies, and also create a carbon sink as the phytoplankton died and settled on the ocean floor. If this trial was successful, perhaps ocean fertilisation could be used on a wider scale to help with food stocks and reduce atmospheric carbon.

The outcry was immediate. The International Maritime Organization said the experiment hadn’t met the necessary guidelines. The Canadian government executed a search warrant against George, seized his data, and suggested he had violated various UN moratoria. George defended his actions in the press and said he was suffering ‘under this dark cloud of vilification’ for ‘daring to go where none have gone before’. He claimed that, while the scientific establishment only talked about the catastrophic risks of climate change, he was the one taking action. George took on the mantle of the maverick with pride.

What’s the lesson of this story? As we’ve said, science needs maverick thinking, but it’s very bad at accommodating it. So shouldn’t we just leave it to those with the will, bravery and ingenuity – as well as the hubris, privilege and cash – to simply get on with the job outside of those conservative institutions? Surely ambitious leaders backed by well-funded private companies can step in and lead the charge on ‘moonshot’ thinking. After all, who better to steer technological and scientific development towards a brighter future than the inventors and owners of those same innovations? Can we put our faith in the Russ Georges of this world?

Well, to put it bluntly – no.

One reason for scepticism boils down to the basic architecture of the maverick story. The maverick is an archetype from a narrative of human progress in which specific individuals – iconoclasts, geniuses – shape history’s trajectory. Yes, there’s something very satisfying about the tale of the solitary battler going it alone against the ignorant mainstream, and finally triumphing over orthodox opinion. That’s a version of the Great Man view of history (and, McClintock notwithstanding, such stories in science are almost always about men). But are these narratives accurate? How can we be sure that they’re true stories, as opposed to merely good ones?

In fact, economic, environmental and socio-political factors – rather than unique individuals – are often better at explaining the origin of scientific innovations. Take the venerated maverick Galileo, who played an important role in overturning Ptolemaic astronomy. In addition to his astronomical and mathematical prowess, we might also point to major advances in lens-grinding, which produced much better telescopes; to the spread of printing, which enabled Galileo to propagate his ideas (thanks to his undoubted genius for propaganda); and to the political and religious context of Reformation and Counter-Reformation Europe, which led to a much more diverse and open intellectual climate (even if Galileo ultimately ran up against the Catholic Church). On these accounts, the players who act out history’s drama become much less relevant. Instead, we should pay much more attention to the historical backdrop – and we shouldn’t be so quick to think that the solution to our problem is a few good mavericks.

There’s also a worry about the kind of mavericks we’re listening to. Most of those floating around today are wealthy, white and male. That’s not surprising: to be a successful maverick, chances are you’re already pretty privileged. Why? Well, you need a sense of entitlement and the confidence to take on the mainstream. You need the emotional and physical support to enable risk-taking. You need credentials so people will pay attention to you. Realistically, only a small minority of people will be lucky enough to acquire all these things, and those people are likely to be drawn from a pretty narrow band of society. From this perspective, it’s no surprise that the folk who’ve garnered most of the accolades for their individual contributions to science have been gentlemen scholars.

The upshot is that mavericks aren’t diverse. That’s not only unfair as a matter of justice – it’s also dangerously limiting if you assess science on its own terms. Most obviously, it gives you a shallower pool of potential researchers. Any structural features that block people from thinking independently, and that have nothing to do with how good they are as scientists, constrain our scientific investigations. Homogeneity produces suboptimal science. This is not just a matter of the quantity of research, but also its breadth and quality. It seems plain to me that your background, experiences and personal history affect the kinds of ideas you have – and so if we want a diversity of ideas, we need a diversity of people.

A classic example comes from palaeoanthropology, the study of human evolution. In the 1960s, the male-dominated field was fixated on a particular narrative about what drove human evolution, a narrative exemplified by the ‘Man the Hunter’ conference at the University of Chicago in 1966. Human evolution was about hunting: a meat-based diet gave us the energy to increase brain size, the needs of cooperation and cognitive acumen required by the hunt drove brain-size increases, and so forth. And indeed, the presumed masculine ‘hunting’ side of the equation was firmly emphasised over the presumed feminine ‘gathering’ side.

As women entered the field, however, they poked holes in this picture. They suggested that hunting perhaps wasn’t so central to human subsistence – at best, the occasional bounty of meat from big game was a supplement to the steady fare provided by gathering. Further, emphasis shifted to the needs of childbirth: cooperation could be explained by the fact that the longer childhood that goes along with increased brain size required collective child-raising, provisioning and protection. The anthropologist Kristen Hawkes at the University of Utah put forward the grandmother hypothesis, which noted the near-unique long post-menopausal life of human women, and suggested that non-breeding women – grandmas – played a crucial role in maintaining well-functioning social groups. Theoretically, it would have been possible for men to come up with these ideas. And it’s true that new technologies and finds also played their part in diversifying our vision of human evolution. But the experiences and perspectives of women definitely helped these new theories to flourish.

Inevitably, if it falls to a small set of people to shape how science and technology develop, we’re likely to make decisions that protect the interests of that narrow group. Which brings me to a final criticism: trusting in anti-establishment mavericks makes us very vulnerable to their idiosyncrasies. We have very little control over the whims of our heroes (male or female); they could very well pick the best, most important and carefully thought-out application of their time and money. Or they might not. It is their time and money, after all. They’re not ultimately accountable to the people they affect. No matter how benevolent the intentions, if wealthy white men are the only people who can be mavericks, that’s bad news for the underprivileged.

The international aid and development sector is regrettably full of sobering examples of what can happen when the gap between ‘helper’ and ‘helped’ is too wide; the ‘voluntourism’ industry, which sends students and travellers from wealthy nations to help out with development projects abroad, has been roundly criticised for being inefficient and even exploitative. Good intentions without the right knowledge and perspective often do more harm than good. It seems to me that what goes for aid likely goes for technology and science, too. Without a range of people from different backgrounds, these fields will develop in ways that entrench existing inequality, inadvertently or not.

Paradoxically, then, I don’t think the solution to the lack of creative thinking is to look for more mavericks. Sure, we want people who battle received opinion, who resist old truths. But instead of a maverick signalling someone with the grit to swim against the scientific tide, perhaps being speculative and risky should simply be more central to science itself. It should be considered as going with the tide.

That’s not easy. Remember what we said about gate-keeping, peer review and betting on different avenues of research. These all play important roles in maintaining scientific authority, and can be enormously productive. But they’re productive only in certain contexts. I’m not suggesting that we give up these things entirely; rather, what we need to do is to relinquish the idea that there’s one single good way to organise scientific communities.

One area that’s in dire need of a revamp – and that’s rife with accusations of crankery – is a field that’s dear to my heart: the study of the existential risk from emerging technologies and scientific innovations. Think rogue artificial intelligence, deadly superbugs, geoengineering gone wrong, an asteroid colliding with Earth. These perils to humanity fall into what the legal scholar Jonathan B Wiener at Duke University in North Carolina calls the ‘tragedy of the uncommons’: we’re all remarkably bad at thinking about large-scale, low-probability, high-impact events.

But think we must. Even if the chances of extinction-level events are minimal, if there are actions we can take now to reduce the likelihood of their occurrence, we should. And this requires a science to help address those threats.

Because of the inherent conservatism of science, however, such speculative research programmes are often seen as doomsday propheteering. Most respectable researchers won’t even get close for fear of falling into a reputation trap. This potentially stops scientists from considering some of the more unlikely – but possible and high-consequence – upshots of their own research. Admittedly, the chances of civilisation-squashing events are hard to calculate or even express, and perceptions and predictions differ wildly. But again, just because existential risk is hard to study, that doesn’t mean we shouldn’t try. It’s surely worth devoting even just a little of our energy to mapping out safer futures for our species and our planet.

The diversity question looms particularly large here. Just because existential risks are everyone’s risks, it doesn’t mean that underprivileged groups count for any less. Consider the possibility of geoengineering as a means of preventing catastrophic climate change. Lower-lying, and usually poorer, countries have been the first to experience the impact of changing weather patterns and rising sea levels, so the perceived urgency of taking action is unevenly distributed. But to be effective, geoengineering will need to be wide-ranging, and probably very dangerous – so who gets to decide which risks we ought to run? When is the threat of climate change sufficient to take the chance on an unpredictable new technology? Answering these questions is well above my pay grade, but if the people making these choices are drawn from a particular – and privileged – bit of society, the results are unlikely to be good for everyone else.

In areas of science where we’re pretty confident about having unearthed the most fruitful lines of enquiry, focusing our research efforts is less problematic. But in existential risk, we don’t even know what the most useful questions are. When the stakes are so high, and the field is so daunting and unclear, that’s precisely the moment to get creative. Instead of having platoons of scientists thinking about the same kind of problem in the same kind of way, now is when we should be spreading them out across the whole landscape of research. When we don’t know what’s good and what’s bad, putting too many eggs into a small number of baskets is a bad idea.

How science works now isn’t necessarily how science will always work, or how it should work. The rules aren’t handed down in stone from the mountain top; rather, they’re painfully and slowly built through decades of hard work, hard thought, and more than a little happenstance, power-play and other features typical of any human endeavour. Scientists are human, and as such they’ll respond to incentives. If we want a less conservative science, then, we should ‘tweak’ the system by changing the allocation of incentives.

For example, we might bring lotteries into science funding to boost the number of risky research bets. If referees have less power over who gets funded, those looking for money won’t have to work so hard to please those referees. We might also set up more institutions – well-supported, well-funded and hopefully high-status – to encourage ‘exploratory’ research. Perhaps we also need more recognition of the fact that scientific success and significance can’t be summed up in a single measure.

These ideas could be reflected in the standards of journals, reviewers, funding bodies and hiring committees. Scientific findings could still be required to meet a standard of significance, but we should expand what that looks like: from the ‘probably wrong but probably productive’, to the ‘likely right but only in specific circumstances’, to the ‘imaginative and opening up new areas of research’, and so on. Plus, if science is going to get less conservative and more wacky, we need to think about insulating the more outré research from public misunderstanding or even misuse – we’ll have to get better at communicating and contextualising scientific data.

These are all extremely tentative suggestions. A lot of work needs to be done to reimagine the institutions that control, shape and enable science to thrive. But first we need to recognise that our very romanticism about mavericks might be the thing that’s holding us back from unearthing more audacious ideas. You shouldn’t have to be a maverick in order to think like one: to be speculative, weird and risky. In some areas, maverick thinking should be the status quo. We don’t need to reincarnate idiosyncratic gentlemen scholars such as Newton, Darwin and Buffon. Instead, let’s try to bake their kind of thinking into the structures of modern science.